Reaper for Apache Cassandra 2.1 was released with Astra support

It’s been a while since the last feature release of Reaper for Apache Cassandra and we’re happy to announce that v2.1 is now available for download!

Let’s take a look at the main changes that were brought by the Reaper community.

Contents

- Lighter load on Cassandra

- New default number of segments per node

- GUI improvements

- Docker improvements

- In-database JMX credentials

- Astra support

New features and improvements

Lighter Load on the Cassandra Backend

We observed and measured that Reaper could send a lot of queries to the Cassandra backend, especially as the repair run history grows. These queries came from regular maintenance scans looking for repair runs in specific states. Because the index table we use to list all repair runs for a cluster did not store the state, Reaper had to read every repair run and filter by state on the client side. This was fixed by adding a new table which stores the state, and it will reduce the number of reads performed on the Cassandra backend by an order of magnitude for heavily used Reaper instances.
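To illustrate why keeping the state in the lookup structure saves reads, here is a minimal in-memory sketch. The dictionaries, field names and state values are hypothetical stand-ins and do not reflect Reaper's actual schema or queries; the point is only the difference between scanning everything and looking up by state.

```python
from collections import defaultdict

# Hypothetical stand-in for the repair run data; not Reaper's real schema.
repair_runs = {
    "run-1": {"cluster": "prod", "state": "DONE"},
    "run-2": {"cluster": "prod", "state": "RUNNING"},
    "run-3": {"cluster": "prod", "state": "DONE"},
    "run-4": {"cluster": "prod", "state": "PAUSED"},
}


def runs_in_state_by_scan(cluster, state):
    """Old approach: read every run, then filter by state on the client."""
    reads = 0
    matches = []
    for run_id, run in repair_runs.items():
        reads += 1  # every run costs one read, matching or not
        if run["cluster"] == cluster and run["state"] == state:
            matches.append(run_id)
    return matches, reads


# New approach: a second structure keyed by (cluster, state).
runs_by_state = defaultdict(list)
for run_id, run in repair_runs.items():
    runs_by_state[(run["cluster"], run["state"])].append(run_id)


def runs_in_state_by_index(cluster, state):
    """Only the matching runs are read."""
    matches = list(runs_by_state.get((cluster, state), []))
    return matches, len(matches)
```

With a long history where most runs are finished, the scan cost grows with the total number of runs, while the state-keyed lookup only grows with the number of runs in the requested state.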

Another observation was that Reaper incurred a fair bit of overhead from two features: its ability to work in a distributed (highly available) mode, and its listening to and displaying of Diagnostic Events coming out of Apache Cassandra 4.0 clusters. Both features now start in a sleep-and-watch mode that uses very few resources, and only lazily initialise themselves when needed.

New Default Number of Segments Per Node

The segment per node setting and how it relates to Reaper’s handling of stuck repairs is one of the most confusing aspects of this tool. By default, a segment that runs for more than 30 minutes will be aborted by Reaper and rescheduled at a later time. The fail count of the aborted segment will be incremented in the process.
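The timeout behaviour described above can be sketched as follows. This is a simplified model, not Reaper's implementation: the `Segment` class, its field names and the state strings are hypothetical, and only the 30 minute default comes from the text.

```python
from dataclasses import dataclass

TIMEOUT_MINUTES = 30  # default segment timeout mentioned above


@dataclass
class Segment:
    # Hypothetical model of a repair segment, for illustration only.
    segment_id: int
    state: str = "NOT_STARTED"
    running_minutes: float = 0.0
    fail_count: int = 0


def check_segment(segment, timeout_minutes=TIMEOUT_MINUTES):
    """Abort a segment that ran past the timeout and make it eligible for a retry.

    Returns True if the segment was aborted, False otherwise.
    """
    if segment.state == "RUNNING" and segment.running_minutes > timeout_minutes:
        segment.fail_count += 1          # the abort is recorded on the segment
        segment.running_minutes = 0.0
        segment.state = "NOT_STARTED"    # will be rescheduled at a later time
        return True
    return False
```

A segment that keeps hitting the timeout accumulates a high fail count without ever completing, which is why slow repairs and high fail counts tend to show up together.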

Reaper doesn’t make it easy in its current form to find out when this happens. Some repairs can take a very long time with slow progress because the number of segments is improperly set. Worse, repeated termination of segments will trigger CASSANDRA-15902 which was recently identified and is being patched. Cassandra doesn’t properly clean up repair sessions when they are force terminated through JMX, eventually leading to heap pressure and OOM in extreme cases. When using Datacenter Aware or Sequential parallelism for repairs, ephemeral snapshots created by Cassandra will not get cleaned up after the early termination of the repairs. It is worth noting that these are special snapshots which get deleted during Cassandra’s startup sequence. A rolling restart will then get rid of all the leftover snapshots across a cluster.

The default number of segments per node used to be 16, which proved to be too low for many clusters: they struggled with slow progress and repeated segment timeouts. In 2.1, we raised the default to 64, which will greatly reduce the odds of getting timeouts on most clusters. As cluster sizes, densities and hardware vary a lot, we advise monitoring repairs by checking the segment list for high fail counts and long segment processing durations.

Some helpful tuning advice:

- If only a few segments go over the timeout, increase the hangingRepairTimeoutMins value in your /etc/cassandra-reaper/cassandra-reaper.yaml file and restart Reaper.

- If many segments go over the timeout, increase the number of segments per node and try to keep segment processing durations below 20 minutes.
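The two rules above can be turned into a small helper for reading a list of segment durations. This is a rough sketch: the function name and the `few_ratio` threshold that separates "a few" from "many" are arbitrary assumptions, not values defined by Reaper, and the durations would have to be collected by you from the segment list.

```python
def tuning_advice(durations_minutes, timeout_minutes=30, few_ratio=0.1):
    """Suggest which setting to change based on segment durations.

    few_ratio is an arbitrary cut-off: at or below this share of timed-out
    segments we treat the problem as "a few segments", above it as "many".
    """
    if not durations_minutes:
        return "no data"
    over = sum(1 for d in durations_minutes if d > timeout_minutes)
    if over == 0:
        return "ok"
    if over / len(durations_minutes) <= few_ratio:
        return "increase hangingRepairTimeoutMins"
    return "increase segments per node"
```

For example, one slow segment out of twenty points at raising the timeout, while three out of four points at raising the number of segments per node.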

GUI improvements

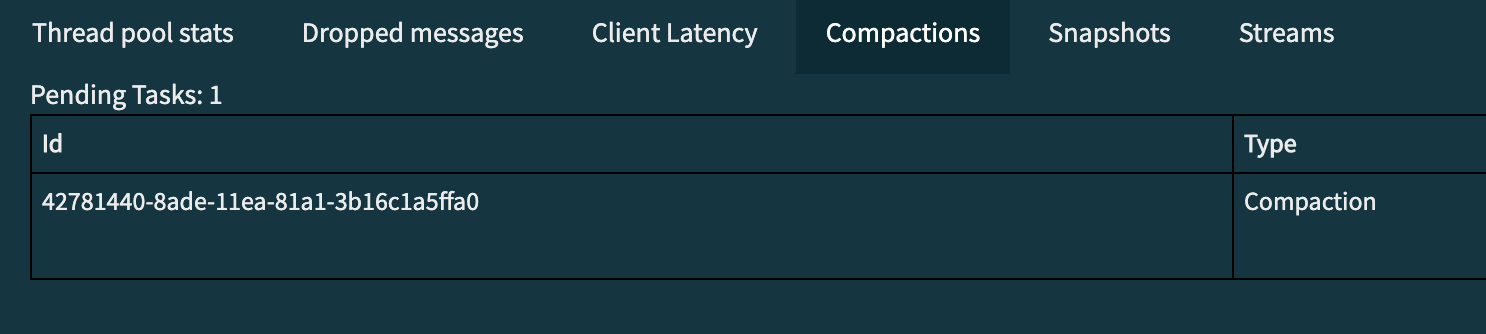

The number of pending compactions was added to the compactions tab when displaying node details, which was a big missing information in that screen. It is very convenient to be able to check running compactions from the GUI and get a feel for the compaction backlog without having to ssh into the nodes and run nodetool commmands:

Our React stack went through a well-deserved refresh and was upgraded to React 16, which will help Reaper contributors use up-to-date dependencies and components.

Docker improvements

Automatic cluster registration was added to the Docker image for better automation. Check the documentation for more information on how to use this feature with docker-compose.

JMX credentials were made configurable in the Docker image, and the spreaper CLI was added to it.

In-database JMX Credentials

So far, Reaper had a clunky and insecure way of storing JMX credentials for managed clusters: you had to store them in Reaper’s yaml configuration file in plain text. Yes, that was not ideal.

Reaper 2.1 now allows storing JMX credentials in the database in encrypted form, using a symmetric key which needs to be set in an environment variable.

In order to use this feature, first uncomment (or add) the following lines in your cassandra-reaper.yaml file:

    cryptograph:
      type: symmetric
      systemPropertySecret: REAPER_JMX_KEY

Change the systemPropertySecret to the name of the environment variable you’ll set with the cryptographic key value.

In this case for example: export REAPER_JMX_KEY=MyEncryptionKeyForReaper21
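Rather than typing a memorable phrase, you may prefer a randomly generated key. The sketch below uses Python's standard `secrets` module to print a ready-to-paste export line. Whether Reaper places any constraints on the key's length or character set is not covered here, so treat the 32 byte size and the URL-safe alphabet as assumptions; `generate_key` is a hypothetical helper name.

```python
import secrets


def generate_key(num_bytes=32):
    """Return a random URL-safe string built from num_bytes of entropy.

    The size and alphabet are assumptions, not documented Reaper requirements.
    URL-safe characters avoid shell quoting issues in an export line.
    """
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # Prints a line you can paste into your shell or service environment.
    print(f"export REAPER_JMX_KEY={generate_key()}")
```

Keep in mind that the key ends up in your terminal history or scrollback when printed this way, so clear it or write it straight into your secrets manager.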

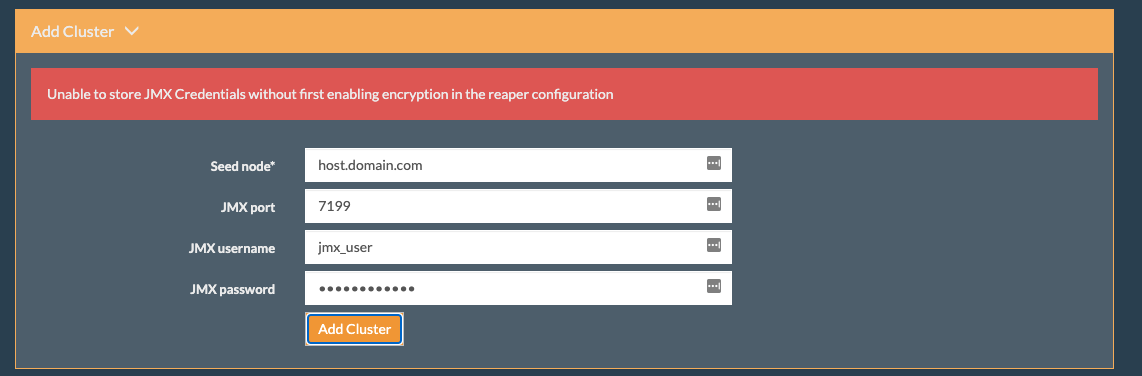

Now restart Reaper and then register a cluster with JMX credentials straight from the UI.

Also, store that encryption key somewhere safe ;)

Reaper will refuse to register clusters with JMX credentials if the cryptograph settings aren’t correctly set in the configuration.

Astra support

DataStax Astra is a database-as-a-service (DBaaS) for Apache Cassandra. It is available on AWS, GCP and Azure.

Setting up Reaper for non-testing use requires a persistent storage backend. Currently, Reaper supports H2, Postgres and Cassandra. When using Cassandra, the following question usually arises: should I store Reaper’s data on the cluster I’m repairing, or should I spin up a separate cluster for that use?

Astra to the rescue! Check our blog post on setting up Reaper with Astra as a backend.

The best part of it is that most Reaper setups will fit into the free tier!

Upgrade now

We recommend that all users upgrade to Reaper for Apache Cassandra 2.1. Please report issues on the GitHub project; contributions through PRs are welcome!