Cassandra Reaper 2.0 was released

Cassandra Reaper 2.0 was released a few days ago, bringing the long-awaited sidecar mode along with a refreshed UI. It also features support for Apache Cassandra 4.0 and diagnostic events. Thanks to our new committer, Saleil Bhat, Postgres can now be used for all distributed modes of Reaper deployments, including sidecar.

Sidecar mode

By default and for security reasons, Apache Cassandra restricts JMX access to the local machine, blocking any external request.

Reaper relies heavily on JMX communications when discovering a cluster, starting repairs and monitoring metrics. In order to use Reaper, ops has to change Cassandra’s default configuration to allow external JMX access, potentially breaking existing company security policies. All of this places an unnecessary burden on Reaper’s out-of-the-box experience.

With its 2.0 release, Reaper can now be installed as a sidecar to the Cassandra process and communicate locally only, coordinating with other Reaper instances through the storage backend exclusively.

At the risk of stating the obvious, this means that all nodes in the cluster must have a Reaper sidecar running so that repairs can be processed.

In sidecar mode, several Reaper instances are likely to be started at the same time, and their concurrent schema migrations could lead to schema disagreement. We’ve contributed to the migration library Reaper uses and added a consensus mechanism based on LWTs (lightweight transactions) so that only a single process can migrate a keyspace at a time.
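To illustrate the idea, here is a minimal sketch of a lock built on "insert if not exists" semantics, which is what an LWT such as `INSERT ... IF NOT EXISTS` provides. It is not the migration library's actual implementation: `FakeLwtTable` is a hypothetical in-memory stand-in for a Cassandra table, and the keyspace and instance names are made up for the example.

```python
import threading


class FakeLwtTable:
    """Hypothetical in-memory stand-in for a table written to with LWTs."""

    def __init__(self):
        self._rows = {}
        # Emulates the atomicity that Paxos gives a real lightweight transaction.
        self._guard = threading.Lock()

    def insert_if_not_exists(self, key, owner):
        """Like INSERT ... IF NOT EXISTS: True if applied, False if a row exists."""
        with self._guard:
            if key in self._rows:
                return False
            self._rows[key] = owner
            return True

    def delete_if_owner(self, key, owner):
        """Like DELETE ... IF owner = ?: only the lock holder can release it."""
        with self._guard:
            if self._rows.get(key) == owner:
                del self._rows[key]
                return True
            return False


def migrate_keyspace(table, keyspace, instance_id, applied):
    """Run the migration only if this instance wins the lock on the keyspace."""
    if not table.insert_if_not_exists(keyspace, instance_id):
        return False  # another instance holds the lock; skip or retry later
    try:
        applied.append(instance_id)  # placeholder for the real schema changes
        return True
    finally:
        table.delete_if_owner(keyspace, instance_id)
```

If a first instance already holds the lock on a keyspace, a call to `migrate_keyspace` from a second instance returns `False` without touching the schema. Once the lock is released, the next caller can acquire it; a real implementation would then check the schema version and find nothing left to migrate.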

Also, since Reaper can only communicate with a single node in this mode, clusters in sidecar mode are automatically added to Reaper upon startup. This allowed us to seamlessly deploy Reaper in clusters generated by the latest versions of tlp-cluster.

A few limitations and caveats of the sidecar in 2.0:

- Reaper clusters are isolated and you cannot manage several Cassandra clusters with a single Reaper cluster.

- Authentication to the Reaper UI/backend cannot be shared among Reaper instances, which will make load balancing hard to implement.

- Snapshots are not supported.

- The default memory settings of Reaper will probably be too high (2G heap) for the sidecar and should be lowered in order to limit the impact of Reaper on the nodes.

Postgres for distributed modes

Until now, starting multiple Reaper instances at once was only possible when using Apache Cassandra as the storage backend.

We were happy to receive a contribution from Saleil Bhat allowing Postgres for deployments with multiple Reaper instances, which also allows it to be used for sidecar setups.

In recognition of the hard work on this feature, we welcome Saleil as a committer on the project.

Apache Cassandra 4.0 support

Cassandra 4.0 is now available as an alpha release and there have been many changes we needed to support in Reaper. Reaper is now fully operational with 4.0, and we will keep working on embracing its new features and enhancements.

Reaper can now listen for and display, in real time, live diagnostic events transmitted by Cassandra nodes. More background information can be found in CASSANDRA-12944, and stay tuned for an upcoming TLP blog post on this exciting feature.

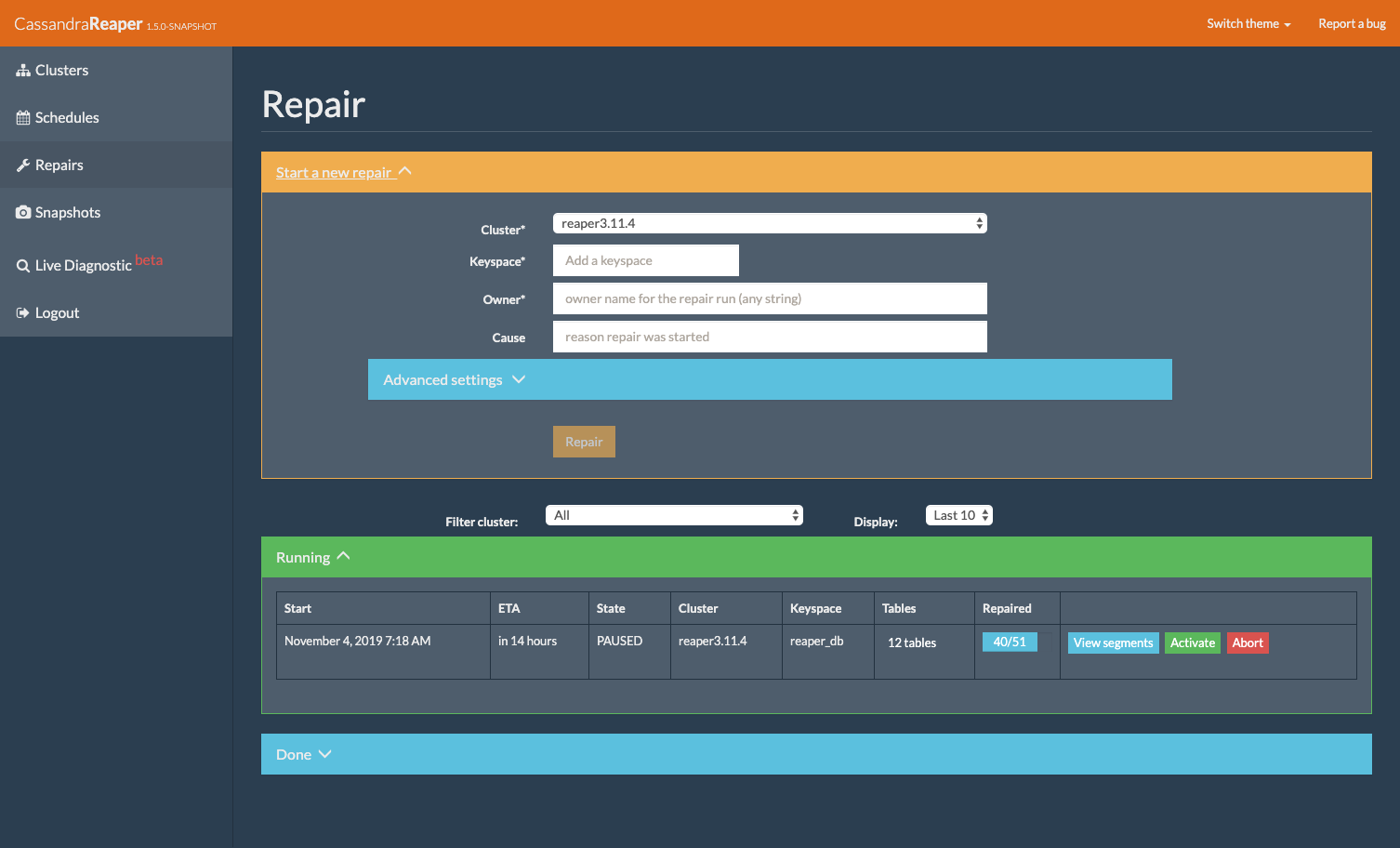

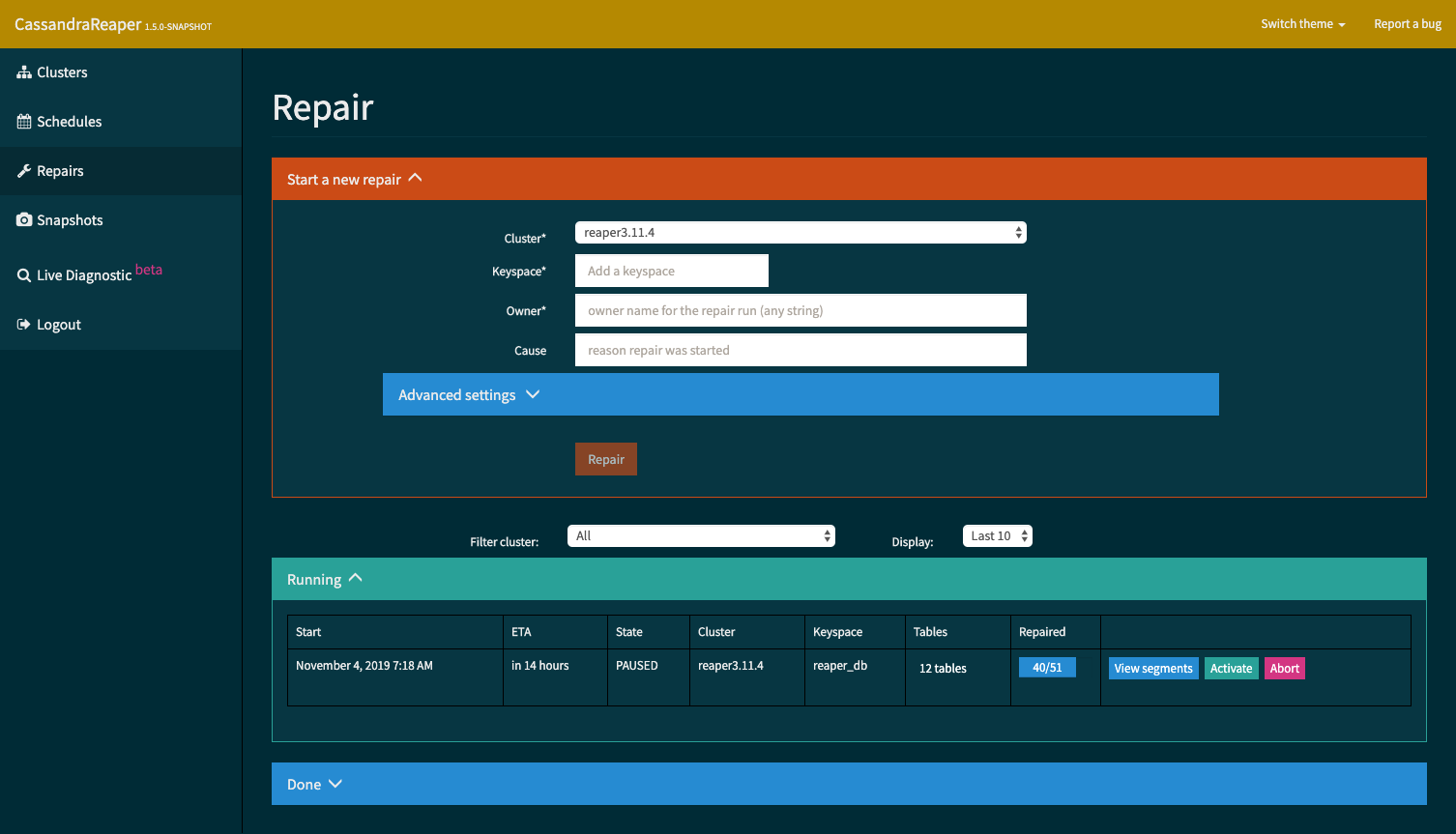

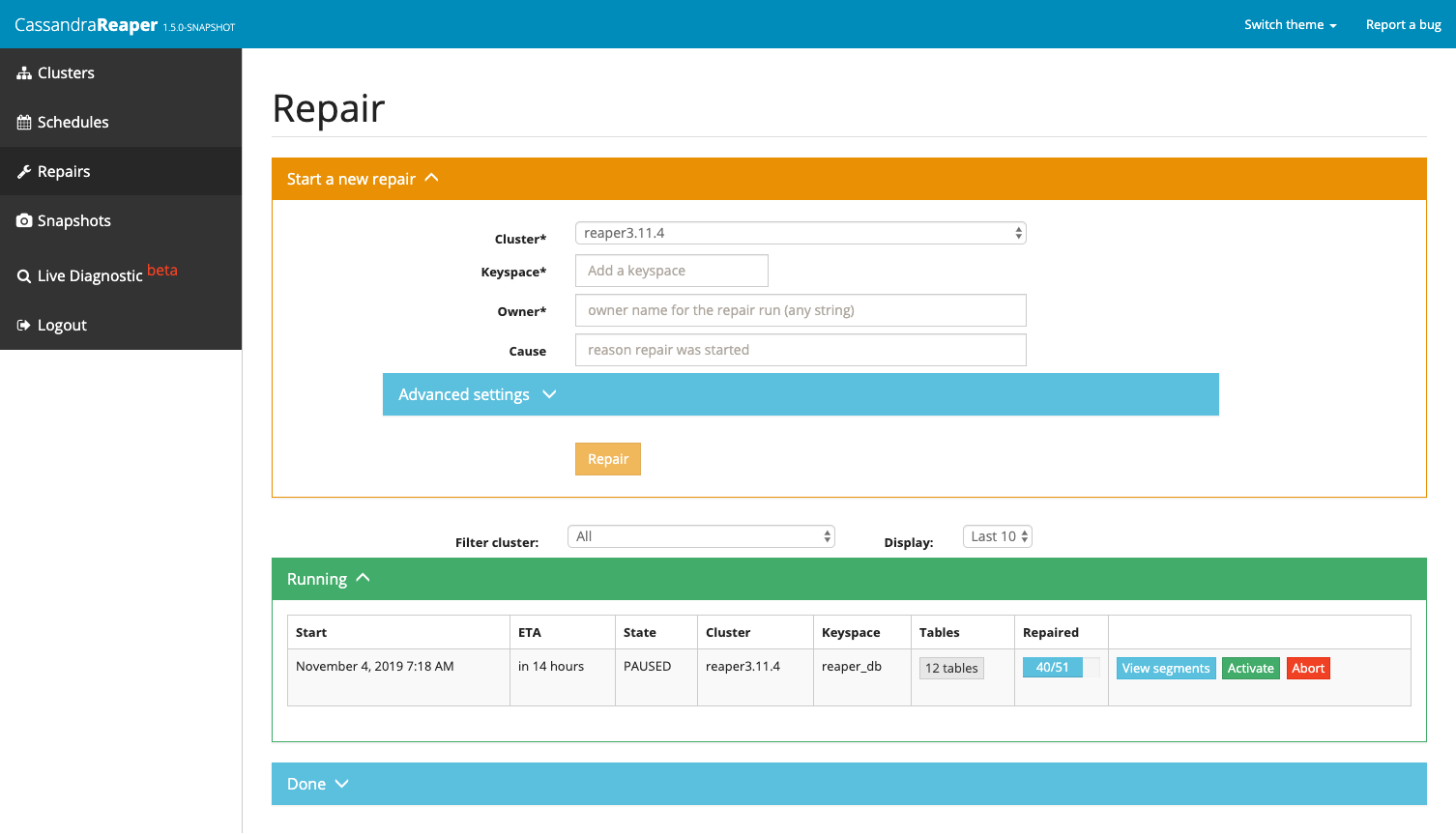

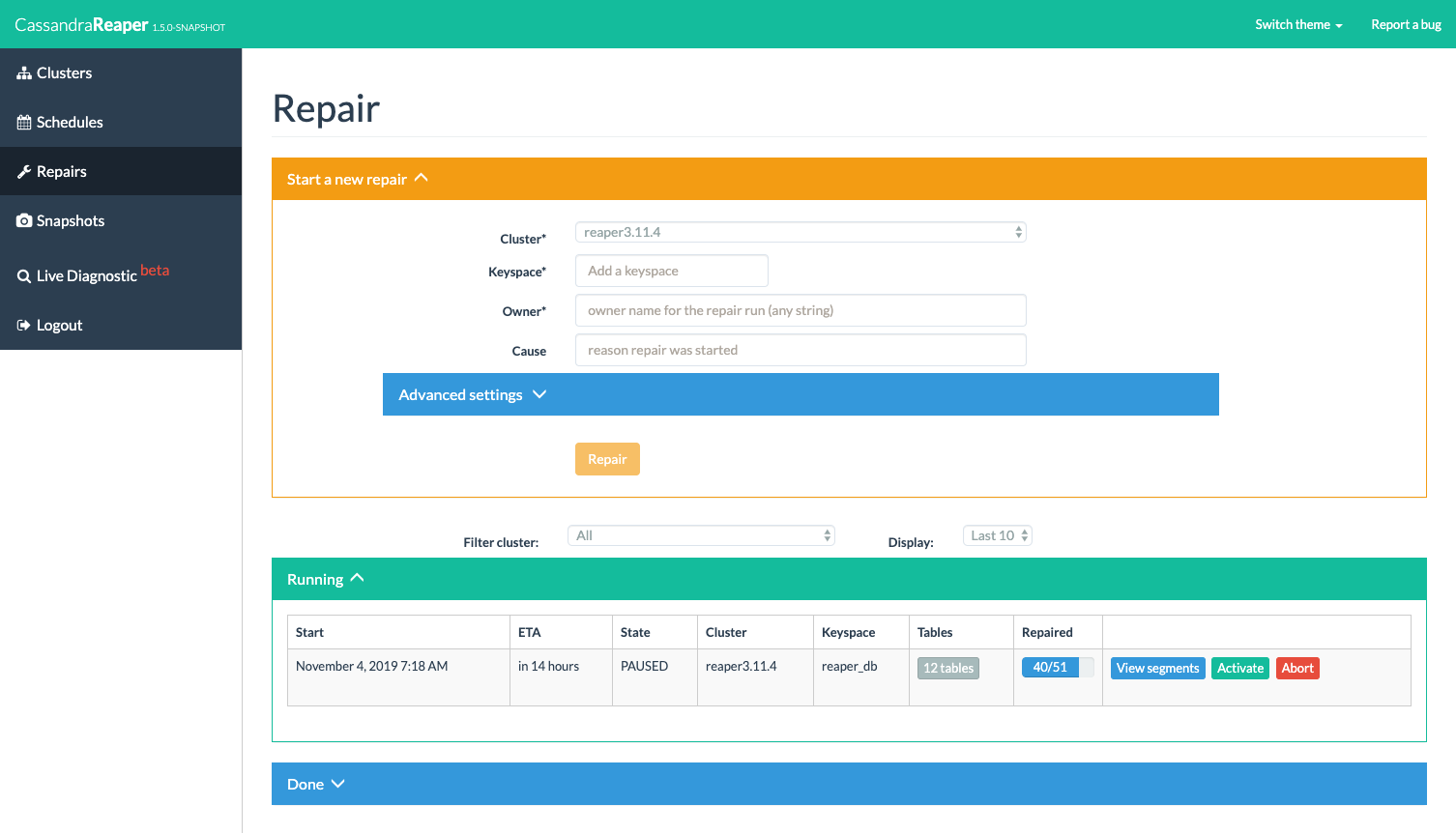

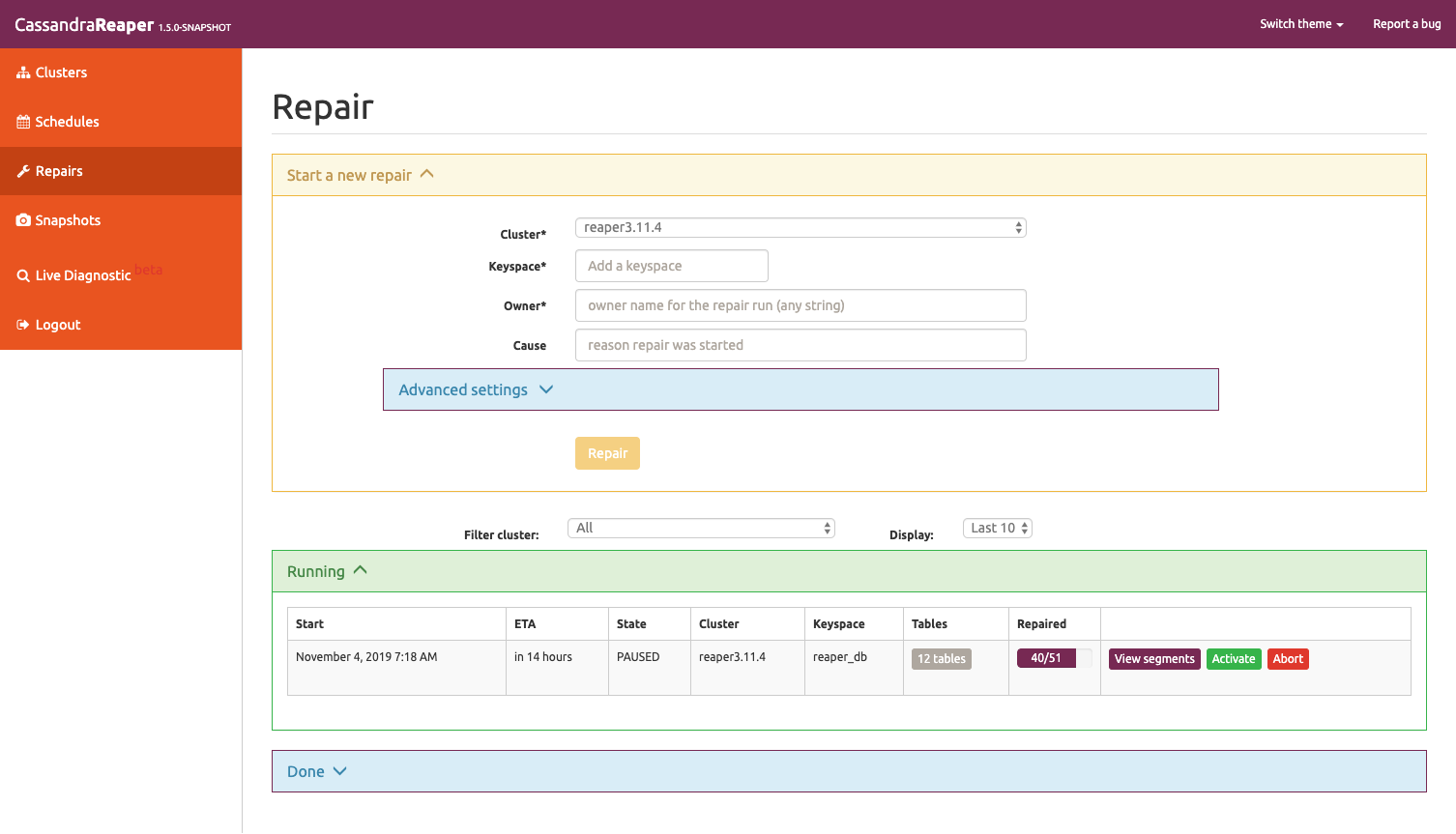

Refreshed UI look

While the UI look of Reaper is not as important as its core features, we’re trying to make Reaper as pleasant to use as possible. Reaper 2.0 now brings five UI themes that can be switched from the dropdown menu. You’ll have the choice between two dark themes and three light themes, which were all partially generated using this online tool.

And more

The docker image was improved to avoid running Reaper as root and to allow disabling authentication, thanks to contributions from Miguel and Andrej.

The REST API and spreaper can now forcefully delete a cluster which still has schedules or repair runs in history, making it easier to remove obsolete clusters, without having to delete each run in history.
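As a rough sketch of what a forced delete could look like against the REST API, the snippet below builds the HTTP request without sending it. The `/cluster/{name}` path, the `force=true` query parameter, and the `localhost:8080` address are assumptions made for the example; check the Reaper REST API documentation for the exact contract, and note that a secured deployment will also require an authenticated session.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request


def build_delete_cluster_request(base_url, cluster_name, force=False):
    """Build (but do not send) a DELETE request for a registered cluster.

    The endpoint path and the 'force' parameter are assumptions for this sketch.
    """
    url = f"{base_url.rstrip('/')}/cluster/{quote(cluster_name, safe='')}"
    if force:
        url += "?" + urlencode({"force": "true"})
    return Request(url, method="DELETE")


# Hypothetical Reaper address and cluster name.
request = build_delete_cluster_request(
    "http://localhost:8080", "obsolete-cluster", force=True
)
```

Passing the resulting object to `urllib.request.urlopen` would send it. Keeping the construction separate makes it easy to inspect the URL before deleting anything.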

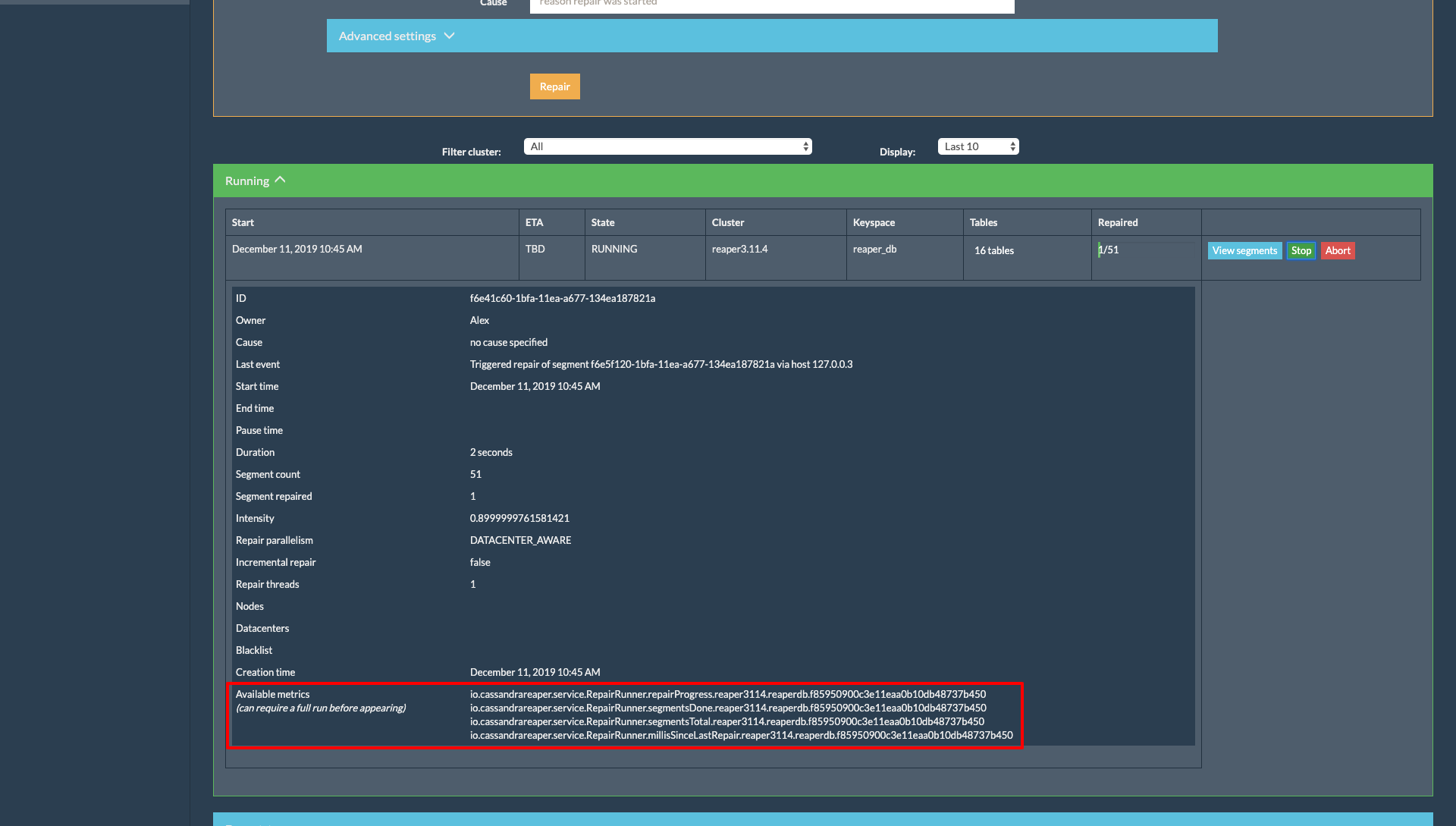

Metrics naming was adjusted to improve tracking repair state on a keyspace. The metrics are now provided in the UI, in the repair run detail panel.

Also, inactive/unreachable clusters will appear last in the cluster list, ensuring active clusters display quickly. Lastly, we brought various performance improvements, especially for Reaper installations with many registered clusters.

Upgrade now

In order to reduce the initial startup time of Reaper, and as we were starting to have a lot of small schema migrations, we collapsed the initial migration to cover the schema up to Reaper 1.2.2. This means upgrades to Reaper 2.0 are possible from Reaper 1.2.2 onwards if you are using Apache Cassandra as the storage backend.
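The version constraint can be expressed as a small pre-upgrade check. This is only an illustrative sketch: the function names are hypothetical, it handles plain numeric versions such as `1.3.0` (not suffixes like `-SNAPSHOT`), and it only reflects the rule stated above for the Cassandra storage backend.

```python
# Oldest Reaper version whose schema the collapsed initial migration covers.
MIN_DIRECT_UPGRADE = (1, 2, 2)


def parse_version(text):
    """Turn a dotted numeric version such as '1.2.2' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def can_upgrade_directly(current_version):
    """True if the installed version can be upgraded straight to 2.0."""
    return parse_version(current_version) >= MIN_DIRECT_UPGRADE
```

An installation on an older version would first need to be upgraded to at least 1.2.2 before moving to 2.0.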

The binaries for Reaper 2.0 are available from yum, apt-get, Maven Central, Docker Hub, and are also downloadable as tarball packages. Remember to back up your database before starting the upgrade.

All instructions to download, install, configure, and use Reaper 2.0 are available on the Reaper website.

Note: the docker image in 2.0 seems to be broken currently and we’re actively working on a fix. Sorry for the inconvenience.