Filtering which measurements the metrics reporter sends

The number of metrics your platform collects can overwhelm a metrics system. This is a common problem, as many of today’s metrics solutions, like Graphite, do not scale well. If you don’t have the option of using a metrics backend that can scale, like DataDog, you’re left trying to find a way to cut back the number of metrics you’re collecting. This blog post goes through some customisations that help alleviate Graphite’s inability to scale. It describes how to install and use customisations made to the metrics and metrics-reporter-config libraries used in Cassandra.

This blog post is therefore intended for developers familiar with operating a Cassandra cluster and sending metrics to Graphite. If you’re not yet familiar with monitoring Cassandra, read this.

How the Metrics Reporter works

Rather than re-invent the wheel explaining how metrics are configured and work in Cassandra, let’s repeat the community’s documentation here:

Metrics in Cassandra are managed using the Dropwizard Metrics library. These metrics can be queried via JMX or pushed to external monitoring systems using a number of built in and third party reporter plugins. … The configuration of these plugins is managed by the metrics reporter config project. There is a sample configuration file located at conf/metrics-reporter-config-sample.yaml.

Once configured, you simply start cassandra with the flag -Dcassandra.metricsReporterConfigFile=metrics-reporter-config.yaml. The specified .yaml file plus any 3rd party reporter jars must all be in Cassandra’s classpath.

– from Cassandra’s Monitoring documentation

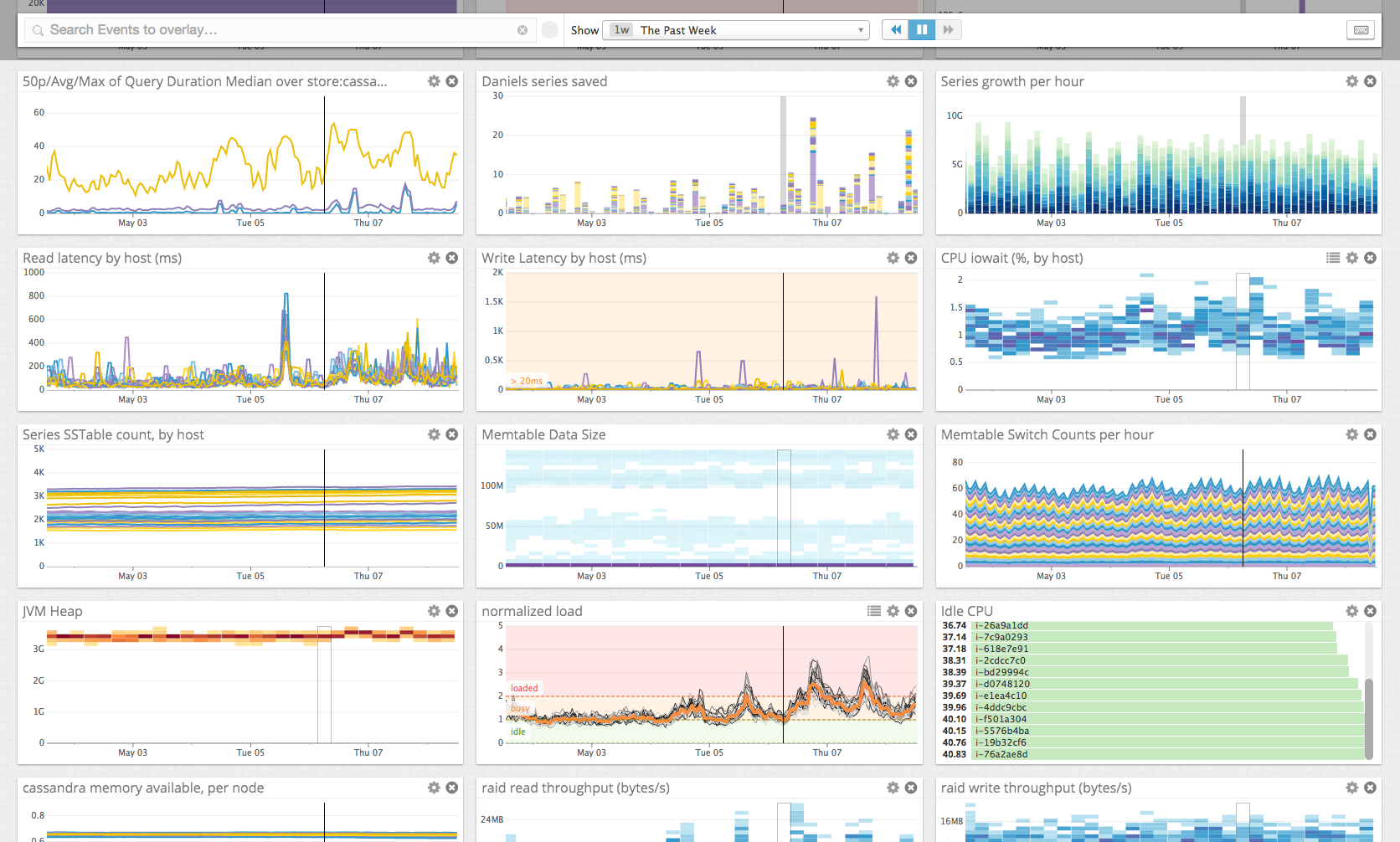

Scaling a successful Cassandra cluster can cause metrics overload problems. They can appear quickly and suddenly, especially when all metrics are aggregated on one backend metrics server.

An overload of data sent to the backend metrics server shows up as some metrics failing to appear in the UI. This can be caused by dropped UDP packets, failed send requests to the backend, or the backend failing to aggregate the volume of data. If a queue or messaging system relays the metric signals to the backend, the overload can instead show up as only old metrics being displayed in the UI.

Filtering Metrics

The obvious first approach to address such an issue is to reduce the number of metrics you’re collecting. Teams often just measure everything to begin with, and there’s plenty of wisdom in doing so. But with Cassandra there are a lot of metrics you don’t need, provided you apply a little critical thinking. Some metrics are obvious ones to measure. For example, The Last Pickle recommends these as the really crucial metrics: dropped messages, failed requests, GC times, latencies, pending and blocked thread pools, pending compactions, sstables per read, and tombstones per read. These metrics cover the typical situations for availability and for detecting early when you need to scale out (add more nodes to the C* cluster). For further application and cluster performance, the metrics you choose to measure will be based on domain knowledge, past exploration, and use of metrics.

Filtering metrics is done through the configuration file defined and used by the metrics reporter config project, by editing the list of regular expressions under the predicate’s patterns key.

Edit $CASSANDRA_HOME/conf/graphite.yaml

    graphite:
      -
        period: 2
        timeunit: 'SECONDS'
        prefix: 'cassandra'
        hosts:
          - host: 'localhost'
            port: 2003
        predicate:
          color: "white"
          useQualifiedName: true
          patterns:
            - "^org.apache.cassandra.metrics.ColumnFamily.system.schema_columns.+"
            - "^org.apache.cassandra.metrics.ThreadPools.+"
            - "^jvm.gc.+"
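To make the effect of a "white" predicate concrete, here is a minimal Java sketch of how such a pattern list could decide which metric names get reported. It is not the metrics-reporter-config implementation: the class `WhiteListSketch`, the sample metric names, and the assumption that a pattern must match the whole name are all hypothetical and for illustration only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical model of a "white" predicate; not the reporter-config source. */
final class WhiteListSketch {
    private final List<Pattern> patterns = new ArrayList<>();

    WhiteListSketch(List<String> regexes) {
        for (String regex : regexes) {
            patterns.add(Pattern.compile(regex));
        }
    }

    /** With color "white", a metric is reported only if some pattern matches its name. */
    boolean allows(String qualifiedName) {
        for (Pattern pattern : patterns) {
            // Assumption: the pattern has to match the whole qualified name.
            if (pattern.matcher(qualifiedName).matches()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        WhiteListSketch filter = new WhiteListSketch(List.of(
                "^org.apache.cassandra.metrics.ColumnFamily.system.schema_columns.+",
                "^org.apache.cassandra.metrics.ThreadPools.+",
                "^jvm.gc.+"));

        // Illustrative metric names, not captured from a real node.
        String[] names = {
            "org.apache.cassandra.metrics.ThreadPools.PendingTasks.request.MutationStage",
            "org.apache.cassandra.metrics.ColumnFamily.my_keyspace.my_table.ReadLatency",
            "jvm.gc.ParNew.count",
            "jvm.memory.heap.used"
        };
        for (String name : names) {
            System.out.println((filter.allows(name) ? "report " : "drop   ") + name);
        }
    }
}
```

Note that the dots in these patterns are unescaped, so each one matches any single character rather than only a literal dot. That is harmless for the names above, but worth remembering when writing tighter patterns.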

Filtering Measurements

What if you’re still overwhelming your metrics backend after cutting out all the metrics you deemed unnecessary?

There are many measurements within each metric, and if we can start to filter out measurements within metrics we can probably salvage many a metrics backend. For example, unneeded measurements could be “999percentile”, “mean” and “stddev” for histograms and timers, and “count” for meters.
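A rough way to see what measurement filtering buys you is to count the lines sent per reporting period. The Java sketch below is only back-of-the-envelope arithmetic: the class name, the number of timers, and the figure of 15 measurements per timer are assumptions (the real count depends on the Metrics version and the reporter in use), so substitute numbers from your own cluster.

```java
/** Back-of-the-envelope model; every number passed in is a placeholder. */
final class MeasurementVolumeSketch {

    /** Lines sent per reporting period for one metric type, after filtering. */
    static long linesPerPeriod(long metricCount, int measurementsPerMetric, int filteredOut) {
        if (metricCount < 0 || filteredOut < 0 || filteredOut > measurementsPerMetric) {
            throw new IllegalArgumentException("counts out of range");
        }
        return metricCount * (measurementsPerMetric - filteredOut);
    }

    public static void main(String[] args) {
        long timers = 1_000;            // hypothetical number of timers on one node
        int measurementsPerTimer = 15;  // assumption; depends on Metrics version and reporter

        long before = linesPerPeriod(timers, measurementsPerTimer, 0);
        // Drop 999percentile, mean and stddev from every timer.
        long after = linesPerPeriod(timers, measurementsPerTimer, 3);
        System.out.println(before + " -> " + after + " lines per period");
    }
}
```

With these placeholder numbers, dropping three measurements per timer takes 15000 lines per period down to 12000, a 20% reduction for that metric type without losing a single metric.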

The idea of filtering measurements within metrics is not new. It was first added to the metric reporter config back in November 2013:

If you want to report only a subset of the measurements that are reported by a meter then use can use [sic] the measurement options on the predicate configuration. This feature is only available if you include as a dependency a fork of the metrics project that supports this feature, such as http://github.com/mspiegel/metrics. If the per measurement filtering is available then it is only applied once a metric has passed the top level filter.

The matching work in the metrics library remains in a fork of Metrics2 by Michael Spiegel. The feature only existed in the metrics-reporter-config library for Metrics version 2 (Metrics2). It was never re-implemented in the metrics-reporter-config library for Metrics version 3 (Metrics3). Furthermore, no such functionality exists in the fork of the metrics repository referenced in the above README.mdown: the code in the fork has been merged with upstream commits, and any original commits that provided the functionality have been lost. Fortunately, the lost functionality has been re-implemented by The Last Pickle in Metrics3.

How to configure measurement filtering within each metric is described in the metric reporter config project’s README, which leaves the hard part: correctly installing and replacing the jar files in your Cassandra installation.

Installation Instructions for Cassandra-2.1

Cassandra-2.1 still uses Metrics2. The following instructions apply.

First build the required jar files, and upload them to every Cassandra node.

    git clone git@github.com:thelastpickle/metrics.git
    cd metrics
    git checkout mck/v2.2.0_measurement-filter
    mvn package
    scp metrics-core/target/metrics-core-*-SNAPSHOT.jar <cassandra_node>:/tmp/
    scp metrics-graphite/target/metrics-graphite-*-SNAPSHOT.jar <cassandra_node>:/tmp/

Then upgrade the required jar files on each Cassandra node.

    nodetool stopdaemon
    cd $CASSANDRA_HOME
    rm lib/metrics-core-2.2.0.jar lib/metrics-graphite-2.2.0.jar
    cp /tmp/metrics-core-*-SNAPSHOT.jar lib/
    cp /tmp/metrics-graphite-*-SNAPSHOT.jar lib/
    # start the c* node

Note that the new metrics-*-2.2.0.jar files require Java 8.

Installation Instructions for Cassandra-2.2+

Cassandra-2.2 onwards uses Metrics3. The following instructions apply.

First build the required jar files, and upload them to every Cassandra node.

    git clone git@github.com:thelastpickle/metrics.git
    cd metrics
    mvn package
    scp metrics-core/target/metrics-core-*-SNAPSHOT.jar <cassandra_node>:/tmp/
    scp metrics-graphite/target/metrics-graphite-*-SNAPSHOT.jar <cassandra_node>:/tmp/
    # leave the metrics checkout before cloning the second repository
    cd ..
    git clone git@github.com:thelastpickle/metrics-reporter-config.git
    cd metrics-reporter-config
    mvn package
    scp reporter-config3/target/reporter-config3-3.0.3.jar <cassandra_node>:/tmp/
    scp reporter-config-base/target/reporter-config-base-3.0.3.jar <cassandra_node>:/tmp/

Then upgrade the required jar files on each Cassandra node.

    nodetool stopdaemon
    cd $CASSANDRA_HOME
    rm lib/metrics-core-3.1.0.jar lib/reporter-config-base-3.0.0.jar lib/reporter-config3-3.0.0.jar
    cp /tmp/metrics-core-*-SNAPSHOT.jar lib/
    cp /tmp/metrics-graphite-*-SNAPSHOT.jar lib/
    cp /tmp/reporter-config3-3.0.3.jar lib/
    cp /tmp/reporter-config-base-3.0.3.jar lib/
    # start the c* node

Example configuration file

An example of the configuration file $CASSANDRA_HOME/conf/graphite.yaml would then look like:

    graphite:
      -
        period: 2
        timeunit: 'SECONDS'
        prefix: 'cassandra'
        hosts:
          - host: 'localhost'
            port: 2003
        predicate:
          color: "white"
          useQualifiedName: true
          patterns:
            - "^org.apache.cassandra.metrics.ColumnFamily.system.schema_columns.+"
            - "^org.apache.cassandra.metrics.ThreadPools.+"
            - "^jvm.gc.+"
          histogram:
            color: "black"
            patterns:
              - metric: ".*"
                measure: "999percentile|mean|stddev"
          timer:
            color: "black"
            patterns:
              - metric: ".*"
                measure: "999percentile|mean|stddev"
          meter:
            color: "black"
            patterns:
              - metric: ".*"
                measure: "count"

This example ensures the 999th percentile, mean and stddev measurements are excluded on histograms and timers, and the count measurement is excluded on meters. They are excluded because the color of each measurement filter is set to black.
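The black-list semantics of a measurement filter can be sketched in a few lines of Java. This is a hypothetical model, not the code from the forked libraries: the class `MeasurementFilterSketch`, the sample metric and measurement names, and the choice of full-string regex matching are assumptions made for illustration.

```java
import java.util.regex.Pattern;

/** Hypothetical model of one metric/measure entry under histogram, timer or meter. */
final class MeasurementFilterSketch {
    private final boolean black;
    private final Pattern metric;
    private final Pattern measure;

    MeasurementFilterSketch(String color, String metricRegex, String measureRegex) {
        this.black = "black".equals(color);
        this.metric = Pattern.compile(metricRegex);
        this.measure = Pattern.compile(measureRegex);
    }

    /** Decides whether one measurement of one metric would be sent. */
    boolean reports(String metricName, String measurement) {
        // Assumption: both regexes must match the full string. With substring
        // matching, "mean" would also match a measurement named "meanRate".
        boolean matched = metric.matcher(metricName).matches()
                && measure.matcher(measurement).matches();
        // "black" drops whatever matches; "white" keeps only what matches.
        return black ? !matched : matched;
    }

    public static void main(String[] args) {
        MeasurementFilterSketch timers =
                new MeasurementFilterSketch("black", ".*", "999percentile|mean|stddev");
        MeasurementFilterSketch meters =
                new MeasurementFilterSketch("black", ".*", "count");

        // Illustrative names only.
        System.out.println("timer mean:  " + timers.reports("ReadLatency", "mean"));
        System.out.println("timer max:   " + timers.reports("ReadLatency", "max"));
        System.out.println("meter count: " + meters.reports("Timeouts", "count"));
    }
}
```

Keep in mind that, per the README quoted earlier, per-measurement filtering is only applied once a metric has passed the top-level filter, so a sketch like this only ever sees metrics that the predicate patterns already let through.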

Unreleased Functionality

The latest version of the Dropwizard Metrics library implements a new way to deactivate specific measurements within each metric. Measurements are referred to as MetricAttribute. To deactivate the 999th percentile metric attribute, do:

    GraphiteReporter.forRegistry(..)
        .disabledMetricAttributes(Collections.singleton(MetricAttribute.P999));

This approach is not implemented in the metrics reporter config, so expect to wait some time before seeing it available in Cassandra.

Notes

These instructions will work for Ganglia as well, replacing the use of metrics-graphite with metrics-ganglia.

If you’d like to know more about monitoring Cassandra in general, check out Alain’s awesome presentation from last year’s Cassandra Summit 2016 conference.