Impacts of many columns in a Cassandra table

Recently, we’ve performed a health check on a cluster that was having transient performance issues. One of the main tables quickly caught our attention: it had 135 columns and latencies were suboptimal. We suspected the number of columns to be causing extra latencies and created some stress profiles to verify this theory, answering the following question: What is the impact of having lots of columns in an Apache Cassandra table?

How Many Columns Is Too Many?

To answer this, we needed to create some stress workloads that would allow configuring the number of columns in a table while maintaining a constant row size to limit the moving parts: we’re loading the same amount of data, but it is organized differently.

The tlp-stress workload that matched our customer’s use case the best was BasicTimeSeries, which just missed a few workload parameters to set the number of columns and each column min and max number of characters.

Alternate Data Modeling Options

If our theory was proven to be right, we would have to provide an alternate data model that would be efficient and remain convenient to use on the client side. Since writes in the table were immutable, using a frozen User Defined Type (UDT) seemed like a viable option. UDTs would allow to keep the data structured with strict typing. This could be more challenging with serialized JSON strings as types couldn’t be stricly set, but could work with Avro or Protobuf stored as binary objects. Making them frozen allows the whole UDT to be stored as a single cell in the storage engine, requiring that updates overwrite the whole UDT instead of individual fields.

Our BasicTimeSeries tlp-stress profile luckily already had its UDT sibling: UdtTimeSeries which was augmented to provide the same workload parameters, storing a configurable number of fields in the UDT.

Frozen Types

We need to emphasize on the use of a frozen UDT and what it means for the table update patterns.

Let’s consider the following UDT:

CREATE TYPE user_profile (

username text,

date_of_birth text,

email_address text,

phone_number text

)

When used as in a non frozen fashion (which can only be done in Cassandra 3.11) in a table like this:

CREATE TABLE users (

user_id uuid PRIMARY KEY,

profile user_profile

)

I can insert a row as follows:

> INSERT into users (user_id, profile)

VALUES (now(), {username:'alex', date_of_birth:'1977-05-08', email_address:'alex@gmail.com', phone_number:'not giving you my number'});

> SELECT * from users ;

user_id | profile

--------------------------------------+-----------------------------------------------------------------------------------------------------------------------------

78521bb0-3bd2-11eb-9787-fb51e67e2811 | {username: 'alex', date_of_birth: '1977-05-08', email_address: 'alex@gmail.com', phone_number: 'not giving you my number '}

And also update individual fields of the UDT:

> update users set profile.email_address = 'alex_deja@gmail.com' where user_id = 78521bb0-3bd2-11eb-9787-fb51e67e2811;

> select * from users;

user_id | profile

--------------------------------------+----------------------------------------------------------------------------------------------------------------------------------

78521bb0-3bd2-11eb-9787-fb51e67e2811 | {username: 'alex', date_of_birth: '1977-05-08', email_address: 'alex_deja@gmail.com', phone_number: 'not giving you my number '}

But a non frozen UDT isn’t helping us because its fields are stored as separate cells internally, which would be the same for the storage engine as storing distinct columns.

Let’s now create a table that uses the user_profile type in a frozen fashion:

CREATE TABLE frozen_users (

user_id uuid PRIMARY KEY,

profile frozen<user_profile>

)

We can still insert as we did previously:

> insert into frozen_users (user_id, profile) values(now(), {username:'alex', date_of_birth:'1977-05-08', email_address:'alex@gmail.com', phone_number:'not giving you my number '});

> select * from frozen_users;

user_id | profile

--------------------------------------+-----------------------------------------------------------------------------------------------------------------------------

7ac2d820-3bd3-11eb-9787-fb51e67e2811 | {username: 'alex', date_of_birth: '1977-05-08', email_address: 'alex@gmail.com', phone_number: 'not giving you my number '}

But updating individual fields will fail, due to the fact that our UDT is contained in a single cell in the storage engine:

> update frozen_users set profile.email_address = 'alex_deja@gmail.com' where user_id = 7ac2d820-3bd3-11eb-9787-fb51e67e2811;

InvalidRequest: Error from server: code=2200 [Invalid query] message="Invalid operation (profile.email_address = 'alex_deja@gmail.com') for frozen UDT column profile"

Using this technique will only work for immutable writes as it would impose a read before write to update individual columns which cannot guarantee the consistency of updates.

This is the same as storing a JSON blob in a text column: it would have to be updated as a whole.

UDTs (used as frozen or not) can be altered in two ways.

You can add fields by using the following ALTER statement:

> ALTER TYPE user_profile ADD additional_column text;

> desc type user_profile ;

CREATE TYPE user_profile (

username text,

date_of_birth text,

email_address text,

phone_number text,

additional_column text

);

> select * from users;

user_id | profile

--------------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------------------

78521bb0-3bd2-11eb-9787-fb51e67e2811 | {username: 'alex', date_of_birth: '1977-05-08', email_address: 'alex_deja@gmail.com', phone_number: 'not giving you my number ', additional_column: null}

You can also rename existing fields:

> ALTER TYPE user_profile RENAME additional_column to new_column_name;

> select * from users;

user_id | profile

--------------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------------------

78521bb0-3bd2-11eb-9787-fb51e67e2811 | {username: 'alex', date_of_birth: '1977-05-08', email_address: 'alex_deja@gmail.com', phone_number: 'not giving you my number ', new_column_name: null}

But you cannot drop existing fields, which is possible with regular tables where columns can be dropped from the model.

Benchmark Specifications

We ran all benchmarks on a single set of AWS instances to maintain the same conditions for all the workloads, using Apache Cassandra 3.11.9.

We used i3.2xlarge instances sporting 64 GB RAM, 8 vCPUs and a 2 TB NVMe SSD drive.

Cassandra was configured with a 24GB Heap and G1GC, and compaction throughput was raised up to 64 MB/s.

All tlp-stress workloads ran for an hour, with the following parameters:

- 50/50 Read/Write distribution

- 8 threads

- 10000 distinct partitions

- 5000 ops/s fixed rate

- Consistency Level: ONE and LOCAL_QUORUM

- SELECT statements use a

LIMIT 20clause - Asynchronous queries concurrency set to 10

- 10, 50 and 100 columns or UDT fields

- Data column/field size adjusted based on the number of columns to keep a constant size on disk throughout tests

The following commands were used to run the various workloads:

# 10 columns

tlp-stress run BasicTimeSeries -d 1h -r 0.5 -t 8 --workload.nbDataColumns=10 --csv all_columns-10.csv --workload.minChars=100 --workload.maxChars=200 --workload.limit=20 --rate 5000 -p 10000 --drop --cl LOCAL_QUORUM -c 10

# 50 columns

tlp-stress run BasicTimeSeries -d 1h -r 0.5 -t 8 --workload.nbDataColumns=50 --csv all_columns-50.csv --workload.minChars=20 --workload.maxChars=40 --workload.limit=20 --rate 5000 -p 10000 --drop --cl LOCAL_QUORUM -c 10

# 100 columns

tlp-stress run BasicTimeSeries -d 1h -r 0.5 -t 8 --workload.nbDataColumns=100 --csv all_columns-100.csv --workload.minChars=10 --workload.maxChars=20 --workload.limit=20 --rate 5000 -p 10000 --drop --cl LOCAL_QUORUM -c 10

# UDT with 10 fields

tlp-stress run UdtTimeSeries -d 1h -r 0.5 -t 8 --workload.nbDataColumns=10 --csv udts-10.csv --workload.minChars=100 --workload.maxChars=200 --workload.limit=20 --rate 5000 -p 10000 --drop --cl LOCAL_QUORUM -c 10

# UDT with 50 fields

tlp-stress run UdtTimeSeries -d 1h -r 0.5 -t 8 --workload.nbDataColumns=50 --csv udt-50.csv --workload.minChars=20 --workload.maxChars=40 --workload.limit=20 --rate 5000 -p 10000 --drop --cl LOCAL_QUORUM -c 10

# UDT with 100 columns

tlp-stress run UdtTimeSeries -d 1h -r 0.5 -t 8 --workload.nbDataColumns=100 --csv udts-100.csv --workload.minChars=10 --workload.maxChars=20 --workload.limit=20 --rate 5000 -p 10000 --drop --cl LOCAL_QUORUM -c 10

The same commands were used with a different consistency level to run tests at ONE instead.

Benchmark Results

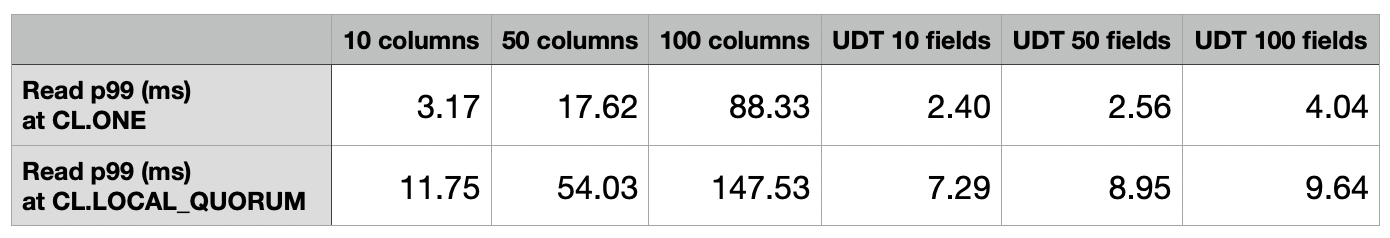

The following table and chart show the average p99 latencies for each workload:

The impact of the number of columns on latencies is massive, while the use of UDTs gave similar results whether they had 10 or 100 fields. We can observe that latencies grow almost linearly with the number of columns in the table, with approximately 5 times higher read latencies between 10 and 50 columns, and a 2.7 times growth between 50 and 100 columns.

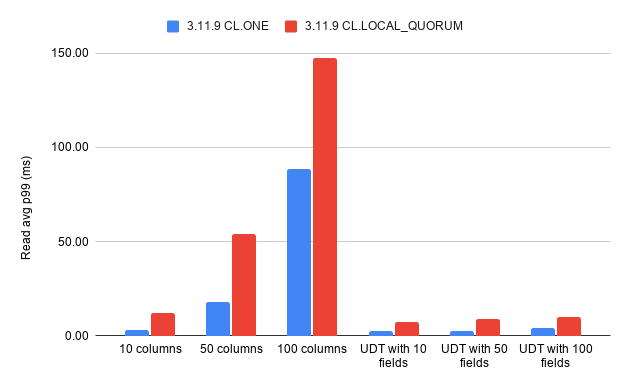

Looking at our Grafana dashboards, we see that the 100 columns test couldn’t keep up with the required fixed rate:

Since tlp-stress doesn’t support coordinated omission, it means that the 100 columns latency is even higher than reported.

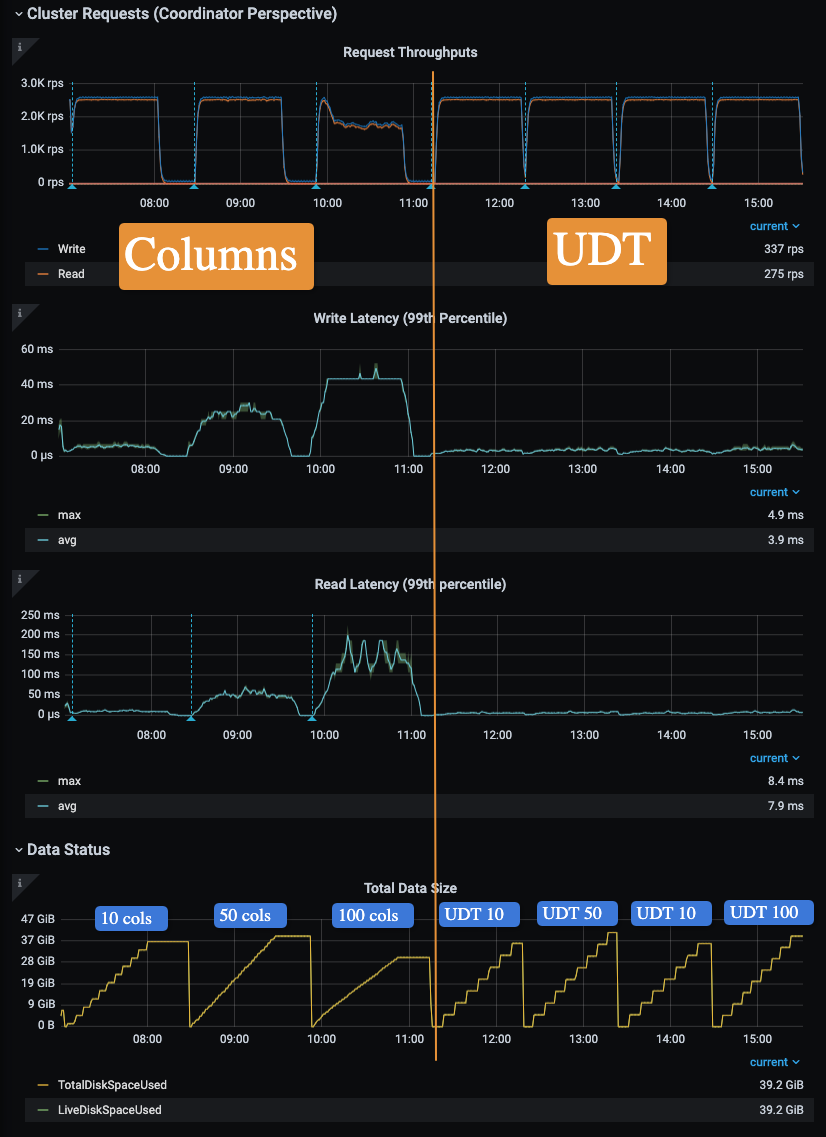

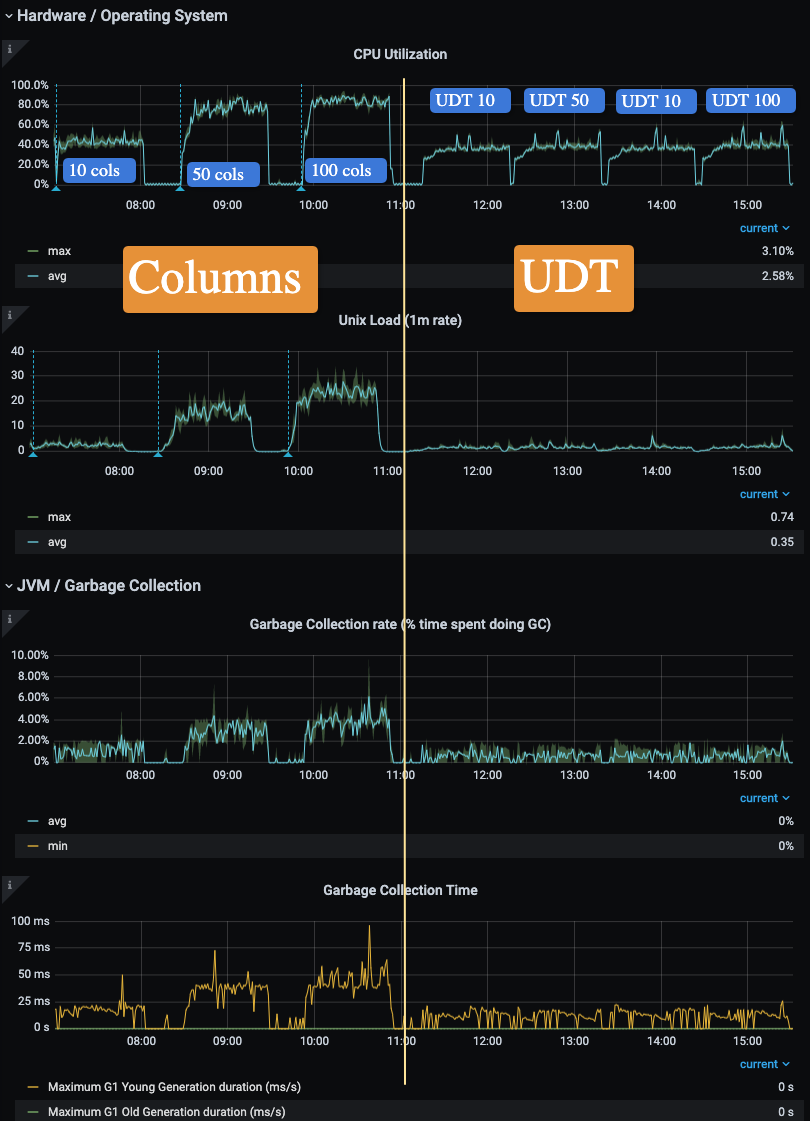

Looking at the CPU and GC metrics, we could see how much they were impacted by the different workloads:

The 50 and 100 columns tests reached a mostly constant 80% CPU utilization, while all UDT workloads remained around 40% like the 10 columns test did.

GC activity was significantly higher in the 50 and 100 columns tests as well compared to the UDT ones.

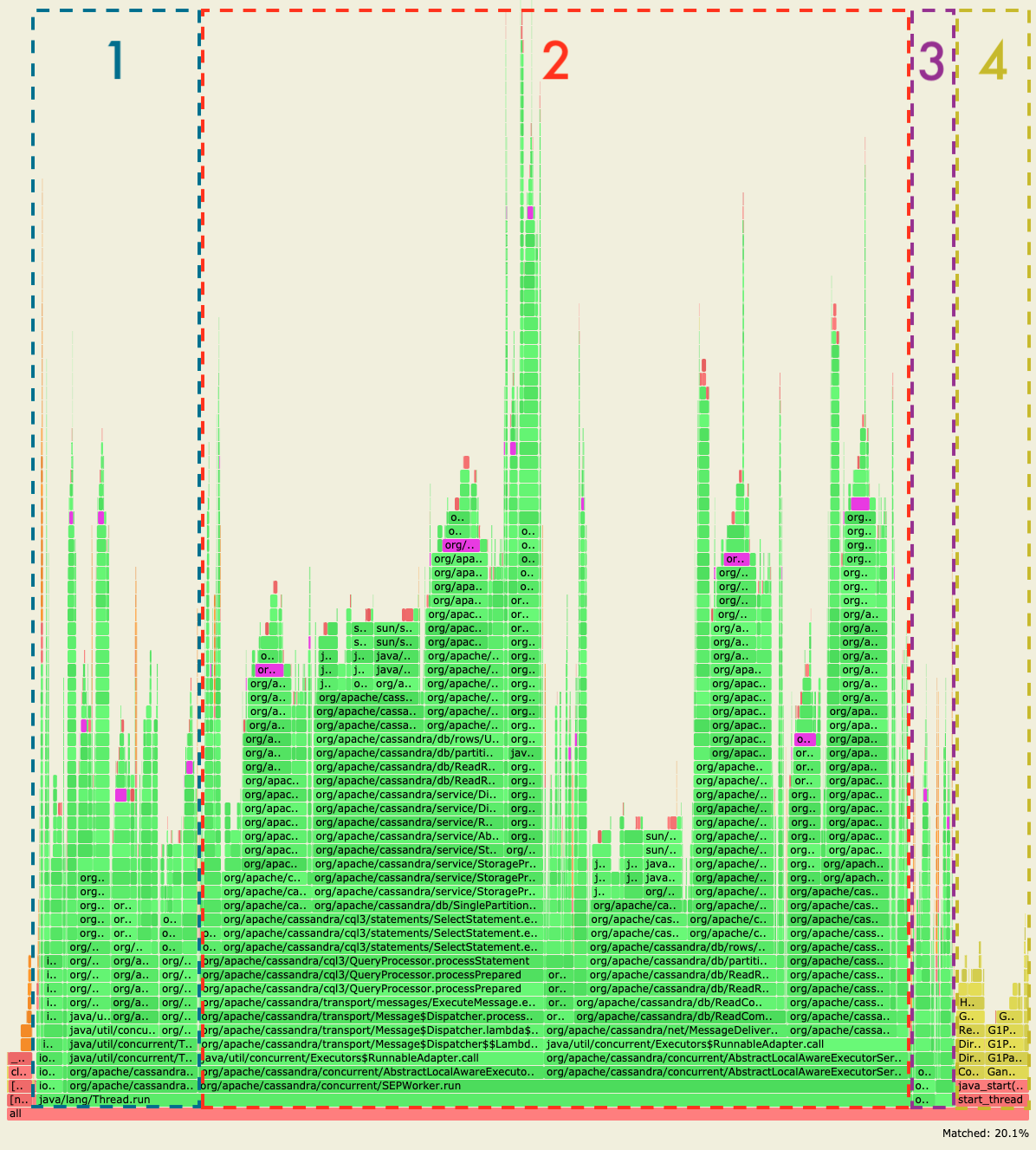

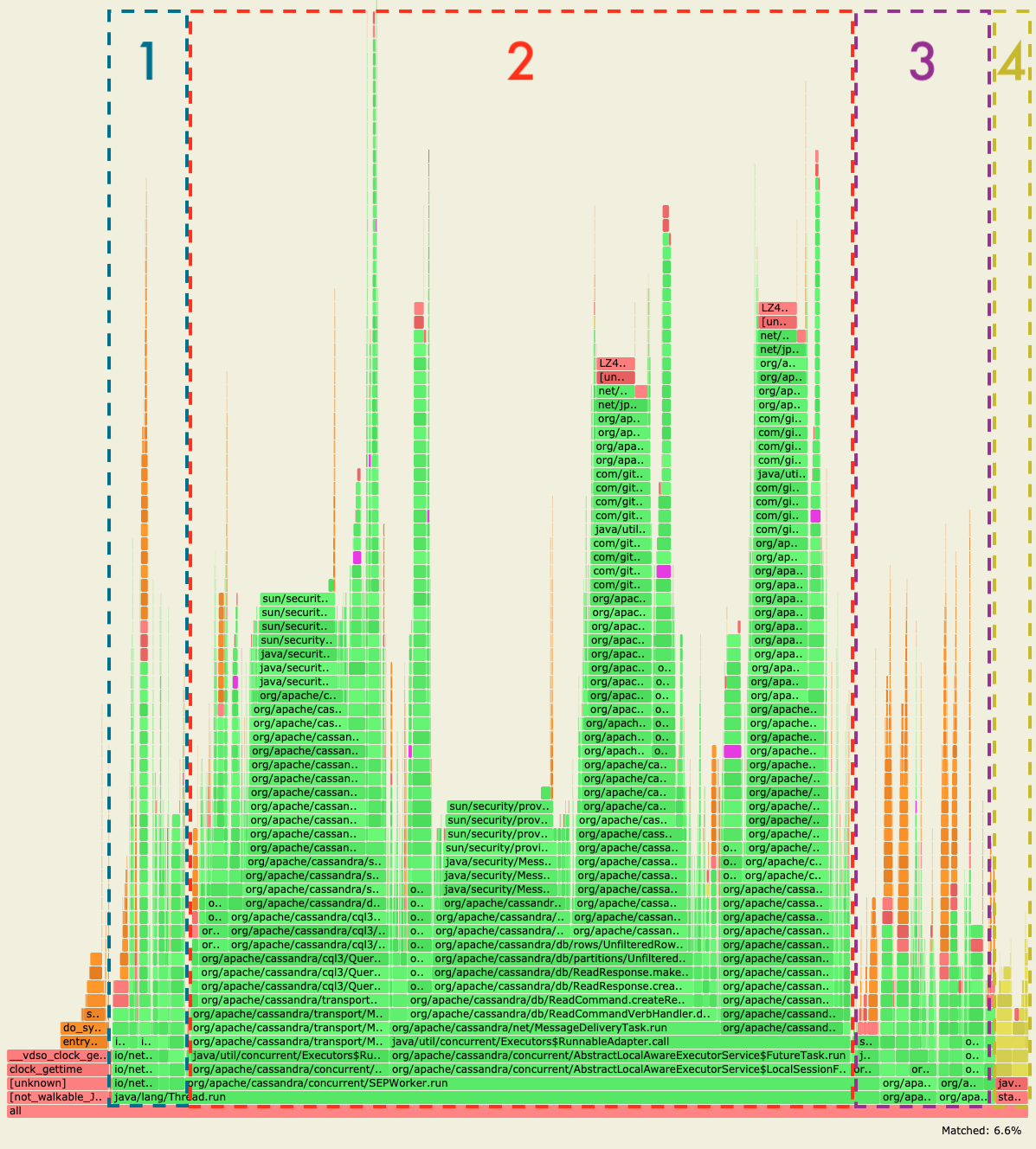

We generated flamegraphs during the 100 columns and the 100 fields UDT test runs to evaluate the impact on Cassandra’s internals.

100 columns flamegraph

100 columns flamegraph

100 fields UDT flamegraph

100 fields UDT flamegraph

The stacks in area 1 show the memtable flush and compaction activity. Area 2 covers the read path, including read repairs and area 3 covers the write path. Area 4 contains GC activity.

These images were captured while searching for Cell$Serializer which highlighted the purple cells and gave the match result that can be seen at the bottom of the image.

On the 100 columns test, we can see that Cell serialization was accounted for 20% of the CPU time spent during the observation window, while it was just 6.6% on the UDT test. In the 100 columns test also, we can see that the compactions, reads and GC activity starved the available resources, impacting the write path and explaining why the test could not reach the requested throughput. Here are the links to the original flamegraphs for exploration purposes:

The impact of the number of cells on internal operations is significant and also has a big overhead on GC operations. Each cell is an object on the heap that will need to be garbage collected eventually. The more objects, the longer the collections, while storing large objects has less impact (to some extent). Native allocations can start hitting concurrency issues when working with lots of tiny objects (primitives).

Each cell also carries specific metadata such as the write timestamp, an optional TTL and an optional deletion timestamp, which are more java objects to allocate and eventually garbage collect.

UDT in Cassandra 3.0.x

Non frozen UDTs were introduced in Cassandra 3.6 and were never backported to the 3.0 branch (as it was only receiving bug fixes). One unforeseen impact here is that a serialization header is missing from the 3.0 SSTables using UDTs, making 3.11 and 4.0 unable to read these UDTs as they will be interpreted as being non frozen. See CASSANDRA-15035 for more information.

The only fix for this issue is to run an offline scrub of all SSTables, meaning that Cassandra needs to be stopped during the operation, using the sstablescrub tool.

Hence we highly recommend against the use of UDTs before Cassandra 3.11.

Conclusion

Data models using immutable writes shouldn’t underestimate the efficiency of key/value-like data models, using a limited number of columns to store information. Alternate data types can be used to store data in a structured fashion in Cassandra while limiting the number of cells. Frozen UDTs should not be used if fields need to be updated individually but they will be a great fit for immutable writes and global updates.

Using UDTs is not recommended before Cassandra 3.11 due to upgrade bugs affecting 3.0 SSTables. Alternate data structures should be considered instead such as JSON, Avro or Protobuf, serialized into text or bytes columns.

Latencies can be vastly improved by grouping columns that get written immutably, which could also help reducing the cluster costs by scaling down or scaling in.