Get Rid of Read Repair Chance

Apache Cassandra has a feature called Read Repair Chance that we always recommend our clients disable. It often adds ~20% to the internal read load on your cluster, serves little purpose, and provides no guarantees.

What is read repair chance?

The feature comes with two schema options at the table level: read_repair_chance and dclocal_read_repair_chance. Each represents the probability that the coordinator node will query extra replica nodes, beyond those required by the requested consistency level, for the purpose of read repairs.

The original setting read_repair_chance now defines the probability of issuing the extra queries to all replicas in all data centers. And the newer dclocal_read_repair_chance setting defines the probability of issuing the extra queries to all replicas within the current data center.

The default values are read_repair_chance = 0.0 and dclocal_read_repair_chance = 0.1. This means that cross-datacenter asynchronous read repair is disabled and asynchronous read repair within the datacenter occurs on 10% of read requests.
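How the two probabilities interact can be sketched with a single random draw per read. The snippet below is a simplified Python model, not Cassandra's code: the function name `read_repair_decision` and the returned labels are illustrative, and it assumes the global chance is checked before the datacenter-local one against the same draw.

```python
import random


def read_repair_decision(read_repair_chance, dclocal_read_repair_chance, roll=None):
    """Return which extra replicas (if any) a single read would also query.

    Simplified model: one uniform draw in [0, 1) is compared against both
    table options, global first. Pass `roll` to make the outcome deterministic.
    """
    if roll is None:
        roll = random.random()
    if read_repair_chance > roll:
        return "GLOBAL"      # extra queries to all replicas in all data centers
    if dclocal_read_repair_chance > roll:
        return "DC_LOCAL"    # extra queries to all replicas in the local data center
    return "NONE"            # only the replicas the consistency level requires


# With the defaults, roughly 10% of reads take the DC_LOCAL path.
random.seed(42)
decisions = [read_repair_decision(0.0, 0.1) for _ in range(100_000)]
print(decisions.count("DC_LOCAL") / len(decisions))
```

Setting both options to 0.0 makes every read return "NONE" in this model, which is the behaviour this post argues for.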

What does it cost?

Consider the following cluster deployment:

- A keyspace with a replication factor of three (RF=3) in a single data center
- The default value of dclocal_read_repair_chance = 0.1
- Client reads using a consistency level of LOCAL_QUORUM
- Client is using the token aware policy (default for most drivers)

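In this setup, ~10% of the read requests result in the coordinator issuing two messaging system queries to two replicas, instead of just one. Because each LOCAL_QUORUM read normally touches two replicas (the coordinator's own local read plus one remote query), one extra replica read on 10% of requests results in an additional ~5% load.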

If the requested consistency level is LOCAL_ONE, which is the default for the java-driver, then ~10% of the read requests result in the coordinator increasing messaging system queries from zero to two. This equates to a ~20% read load increase.

With read_repair_chance = 0.1 and multiple datacenters the situation is much worse. With three data centers, each with RF=3, 10% of the read requests will result in the coordinator issuing eight extra replica queries, and six of those extra replica queries are cross-datacenter queries. In this use case it comes close to a doubling of your read load.
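The percentages above follow from simple arithmetic: the chance of the extra queries firing, multiplied by the number of extra replica reads, divided by the replica reads a request needs anyway. Here is a minimal sketch; the function name `extra_read_load` is made up for illustration, and the third scenario assumes reads at LOCAL_ONE, which the figure of eight extra queries implies.

```python
def extra_read_load(chance, baseline_reads, extra_reads):
    """Relative increase in replica reads caused by read repair chance.

    chance         -- probability that a read triggers the extra queries
    baseline_reads -- replica reads a request needs at its consistency level
    extra_reads    -- additional replica reads issued when the chance fires
    """
    return chance * extra_reads / baseline_reads


scenarios = {
    # LOCAL_QUORUM on RF=3 reads 2 replicas; read repair adds the third.
    "LOCAL_QUORUM, 1 DC, RF=3": extra_read_load(0.1, baseline_reads=2, extra_reads=1),
    # LOCAL_ONE reads 1 replica (the coordinator itself); read repair adds two.
    "LOCAL_ONE, 1 DC, RF=3": extra_read_load(0.1, baseline_reads=1, extra_reads=2),
    # Assumed LOCAL_ONE with 3 DCs of RF=3 each: read repair adds the other eight.
    "LOCAL_ONE, 3 DCs, RF=3 each": extra_read_load(0.1, baseline_reads=1, extra_reads=8),
}

for name, increase in scenarios.items():
    print(f"{name}: +{increase:.0%} replica reads")
```

This counts replica reads only. It ignores the extra cost of cross-datacenter traffic, so the multi-datacenter case is, if anything, understated.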

Let’s take a look at this with some flamegraphs…

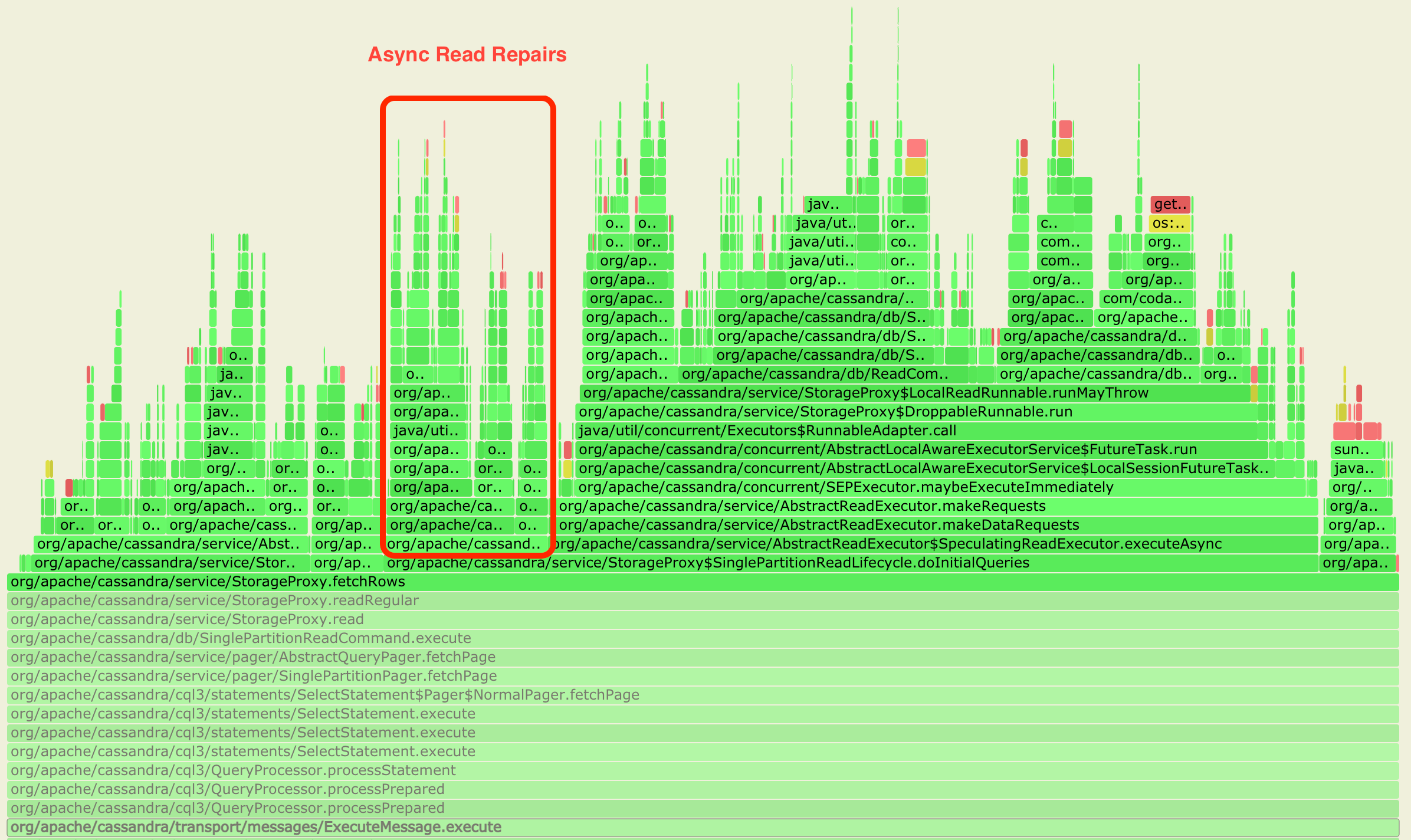

The first flamegraph shows the default configuration of dclocal_read_repair_chance = 0.1. When the coordinator’s code hits the AbstractReadExecutor.getReadExecutor(..) method, it splits paths depending on the ReadRepairDecision for the table. Stack traces containing AlwaysSpeculatingReadExecutor, SpeculatingReadExecutor or NeverSpeculatingReadExecutor give us a hint as to which code path we are on, and whether read repair chance or speculative retry is in play.

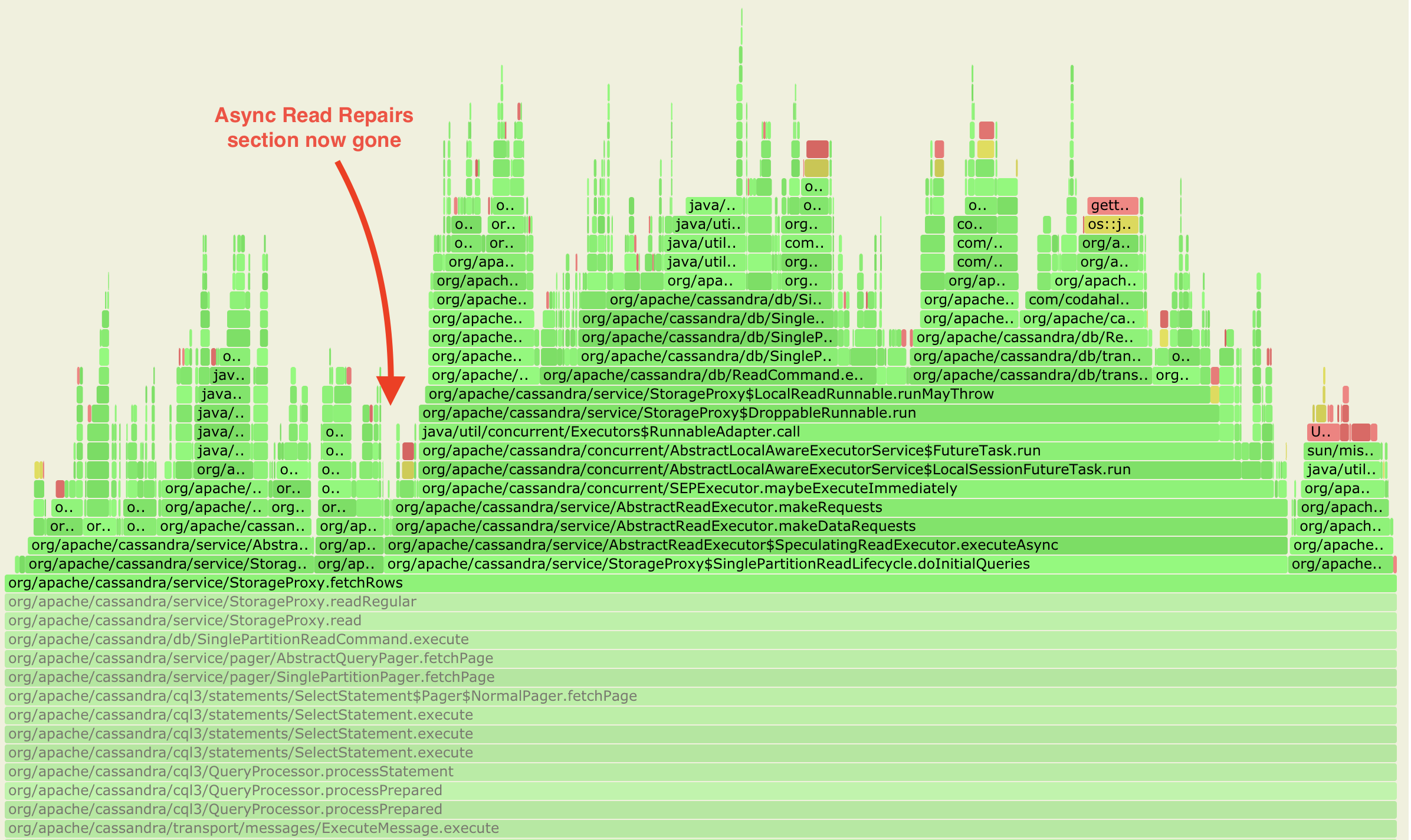

The second flamegraph shows the behaviour when the configuration has been changed to dclocal_read_repair_chance = 0.0. The AlwaysSpeculatingReadExecutor flame is gone, which demonstrates the degree of complexity removed from runtime. Specifically, read requests from the client are no longer forwarded to every replica, only to those required by the consistency level.

ℹ️ These flamegraphs were created with Apache Cassandra 3.11.9, Kubernetes and the cass-operator, nosqlbench and the async-profiler.

Previously we relied upon the existing tools of tlp-cluster, ccm, tlp-stress and cassandra-stress. This new approach with new tools is remarkably easy, and by using k8s the same approach can be used locally or against a dedicated k8s infrastructure. That is, I don't need to switch between ccm clusters for local testing and tlp-cluster for cloud testing. The same recipe applies everywhere. Nor am I bound to AWS for my cloud testing. It is also worth mentioning that these new tools are gaining a lot of focus and momentum from DataStax, so the introduction of this new approach to the open source community is deserved.

The full approach and recipe to generating these flamegraphs will follow in a [subsequent blog post](/blog/2021/01/31/cassandra_and_kubernetes_cass_operator.html).

What is the benefit of this additional load?

The coordinator returns the result to the client once it has received the responses required by the user’s requested consistency level, without waiting for the extra replicas. This is why we call the feature asynchronous read repairs. It means that read latencies are not directly impacted, though the additional background load will indirectly impact latencies.

Asynchronous read repairs also mean that there’s no guarantee that the response to the client is repaired data. In summary, 10% of the data you read will be guaranteed to be repaired after you have read it. This is not a guarantee clients can use or rely upon. And it is not a guarantee Cassandra operators can rely upon to ensure data at rest is consistent. In fact it is not a guarantee an operator would want to rely upon anyway, as most inconsistencies are dealt with by hints, and nodes down longer than the hint window are expected to be manually repaired.

Furthermore, systems that use strong consistency (i.e. where reads and writes are using quorum consistency levels) will not expose such unrepaired data anyway. Such systems only need repairs and consistent data on disk for lower read latencies (by avoiding the additional digest mismatch round trip between coordinator and replicas) and ensuring deleted data is not resurrected (i.e. tombstones are properly propagated).
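The reason quorum reads and writes hide unrepaired data is the familiar overlap rule: if the number of replicas read plus the number written exceeds the replication factor, every read set shares at least one replica with the latest write set. Here is a small sketch of that check; the helper names are illustrative.

```python
def quorum(replication_factor):
    """Number of replicas that form a quorum for the given replication factor."""
    return replication_factor // 2 + 1


def reads_see_latest_write(replication_factor, read_replicas, write_replicas):
    """True when every read set must overlap every write set (R + W > RF)."""
    return read_replicas + write_replicas > replication_factor


rf = 3
# QUORUM reads and writes: 2 + 2 > 3, so a read always includes an up-to-date replica.
print(reads_see_latest_write(rf, quorum(rf), quorum(rf)))
# ONE reads and ONE writes: 1 + 1 <= 3, so a read may hit only stale replicas.
print(reads_see_latest_write(rf, 1, 1))
```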

So the feature gives us additional load for no usable benefit. This is why disabling the feature is always an immediate recommendation we give everyone.

It is also the rationale for the feature being removed altogether in the next major release, Cassandra version 4.0. And, since 3.0.17 and 3.11.3, if you still have values set for these properties in your table, you may have noticed the following warning during startup:

dclocal_read_repair_chance table option has been deprecated and will be removed in version 4.0

Get Rid of It

For Cassandra clusters not yet on version 4.0, do the following to disable all asynchronous read repairs:

cqlsh -e 'ALTER TABLE <keyspace_name>.<table_name> WITH read_repair_chance = 0.0 AND dclocal_read_repair_chance = 0.0;'
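With many tables, typing the statement by hand gets tedious. One possible approach is to generate the statements from a list of tables, as in the sketch below. The keyspace and table names are hypothetical placeholders, and the helper `disable_read_repair_statements` is just an illustration.

```python
def disable_read_repair_statements(tables):
    """Build one ALTER TABLE statement per (keyspace, table) pair."""
    template = (
        "ALTER TABLE {keyspace}.{table} "
        "WITH read_repair_chance = 0.0 AND dclocal_read_repair_chance = 0.0;"
    )
    return [template.format(keyspace=ks, table=tbl) for ks, tbl in tables]


# Hypothetical tables; replace with the ones in your own schema.
tables = [("shop", "orders"), ("shop", "customers")]

for statement in disable_read_repair_statements(tables):
    print(statement)
```

The printed statements can be saved to a file and run with cqlsh -f, or passed one at a time to cqlsh -e as above.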

When upgrading to Cassandra 4.0, no action is required: these settings are ignored and disappear.