Running tlp-stress in Kubernetes

Performance tuning and benchmarking are key to the successful operation of Cassandra. We have a great tool in tlp-stress that makes benchmarking a lot easier. I have been exploring running Cassandra in Kubernetes for a while now, and at one point I thought it would be nice to be able to use tlp-stress in Kubernetes. After a bit of prototyping, I decided to write an operator. This article introduces stress-operator, the Kubernetes operator for tlp-stress.

Before getting into the details of stress-operator, let’s consider the following question: What exactly is a Kubernetes operator?

Kubernetes has a well-defined REST API with lots of built-in resource types like Pods, Deployments, and Jobs. The API for creating these built-in objects is declarative. Users typically create objects using the tool kubectl and YAML files. A controller is code that executes a control loop watching one or more of these resource types. A controller’s job is to ensure that an object’s actual state matches its expected state.

An operator extends Kubernetes with custom resource definitions (CRDs) and custom controllers. A CRD provides domain specific abstractions. The custom controller provides automation that is tailored around those abstractions.
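To make the control loop idea a bit more concrete, here is a toy sketch in shell. It is not how Kubernetes controllers are actually written; the function name reconcile and the counts passed to it are made up purely for illustration.

```shell
# Toy reconcile step: compare the desired state with the actual state and
# print the action needed to converge. All names here are illustrative.
reconcile() {
  desired=$1
  actual=$2
  if [ "$actual" -lt "$desired" ]; then
    echo "create $((desired - actual))"
  elif [ "$actual" -gt "$desired" ]; then
    echo "delete $((actual - desired))"
  else
    echo "nothing to do"
  fi
}

# A real controller repeats this step whenever a watched object changes.
reconcile 3 1   # prints "create 2"
```

The important part is that the caller only declares the desired state; the loop works out what to do.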

If the concept of an operator is still a bit murky, don’t worry. It will get clearer as we look at examples of using stress-operator that highlight some of its features, including:

- Configuring and deploying tlp-stress

- Provisioning Cassandra

- Monitoring with Prometheus and Grafana

Installing the Operator

You need to have kubectl installed. Check out the official Kubernetes docs if you do not already have it installed.

You also need access to a running Kubernetes cluster. For local development, my tool of choice is kind.
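If you do not have a cluster yet, a minimal sketch for creating one with kind might look like the following. The helper name create_local_cluster and the cluster name stress-demo are arbitrary choices for this example.

```shell
# Create a local cluster with kind and confirm that kubectl can reach it.
# The default cluster name "stress-demo" is an arbitrary choice.
create_local_cluster() {
  name=${1:-stress-demo}
  kind create cluster --name "$name" &&
    kubectl cluster-info --context "kind-$name"
}

# Usage: create_local_cluster stress-demo
```

kind prefixes the kubectl context with kind-, which is why the second command uses kind-$name.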

Download the following manifests:

stress-operator.yaml declares all of the resources necessary to install and run the operator. The other files are optional dependencies.

casskop.yaml installs the Cassandra operator casskop which stress-operator uses to provision Cassandra.

grafana-operator.yaml and prometheus-operator.yaml install grafana-operator and prometheus-operator respectively. stress-operator uses them to install and configure Grafana and Prometheus for monitoring tlp-stress.

Install the operator along with the optional dependencies as follows:

$ kubectl apply -f stress-operator.yaml

$ kubectl apply -f casskop.yaml

$ kubectl apply -f grafana-operator.yaml

$ kubectl apply -f prometheus-operator.yaml

The above commands install CRDs as well as the operators themselves. There should be three CRDs installed for stress-operator. We can verify this as follows:

$ kubectl get crds | grep thelastpickle

cassandraclustertemplates.thelastpickle.com 2020-02-26T16:10:00Z

stresscontexts.thelastpickle.com 2020-02-26T16:10:00Z

stresses.thelastpickle.com 2020-02-26T16:10:00Z
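If you are scripting the installation, you may prefer to wait for the CRDs rather than grep for them. The following is a sketch that uses kubectl wait; the helper name wait_for_stress_crds and the 60s timeout are my own choices.

```shell
# Block until each stress-operator CRD reports the Established condition.
# The 60s timeout is an arbitrary value for this sketch.
wait_for_stress_crds() {
  for crd in cassandraclustertemplates stresscontexts stresses; do
    kubectl wait --for=condition=established --timeout=60s \
      "crd/$crd.thelastpickle.com" || return 1
  done
}

# Usage: wait_for_stress_crds
```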

Lastly, verify that each of the operators is up and running:

$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

cassandra-k8s-operator 1/1 1 1 6h5m

grafana-deployment 1/1 1 1 4h35m

grafana-operator 1/1 1 1 6h5m

stress-operator 1/1 1 1 4h51m

Note: The prometheus-operator is currently installed with cluster-wide scope in the prometheus-operator namespace.
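The same check can be scripted with kubectl rollout status. This sketch assumes the deployment names shown in the output above and that they live in the current namespace; the helper name and the timeout are arbitrary.

```shell
# Wait for each operator Deployment in the current namespace to finish
# rolling out. Deployment names are taken from the output shown above.
wait_for_operators() {
  for d in stress-operator cassandra-k8s-operator grafana-operator; do
    kubectl rollout status "deployment/$d" --timeout=120s || return 1
  done
}

# Usage: wait_for_operators
```

Since prometheus-operator runs in its own namespace, it is not covered by this loop.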

Configuring and Deploying a Stress Instance

Let’s look at an example of configuring and deploying a Stress instance. First, we create a KeyValue workload in a file named key-value-stress.yaml:

apiVersion: thelastpickle.com/v1alpha1
kind: Stress
metadata:
  name: key-value
spec:
  stressConfig:
    workload: KeyValue
    partitions: 25m
    duration: 60m
    readRate: "0.3"
    consistencyLevel: LOCAL_QUORUM
    replication:
      networkTopologyStrategy:
        dc1: 3
    partitionGenerator: sequence
  cassandraConfig:
    cassandraService: stress

Each property under stressConfig corresponds to a command line option for tlp-stress.

The cassandraConfig section is Kubernetes-specific. When you run tlp-stress (outside of Kubernetes) it will try to connect to Cassandra on localhost by default. You can override the default behavior with the --host option. See the tlp-stress docs for more information about all its options.
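For comparison, here is roughly what the equivalent invocation might look like outside of Kubernetes. Treat this as a sketch: the flag names are written from memory of the tlp-stress docs and should be checked against tlp-stress run --help, the replication setting is left out for brevity, and I am not claiming this is the exact command line that stress-operator generates. The helper name stress_command is made up.

```shell
# Print a tlp-stress command line that roughly mirrors the stressConfig in
# key-value-stress.yaml. The flag names are assumptions; verify them with
# `tlp-stress run --help`. Replication is omitted for brevity.
stress_command() {
  host=$1
  printf '%s\n' "tlp-stress run KeyValue --partitions 25m --duration 60m --readrate 0.3 --cl LOCAL_QUORUM --partitiongenerator sequence --host $host"
}

stress_command stress
```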

In Kubernetes, Cassandra should be deployed using StatefulSets. A StatefulSet requires a headless Service. Among other things, a Service maintains a list of endpoints for the pods to which it provides access.

The cassandraService property specifies the name of the Cassandra cluster headless service. It is needed in order for tlp-stress to connect to the Cassandra cluster.
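If you are unsure whether a given Service is headless, you can check its clusterIP, which is None for headless Services. The helper below is a small sketch; the function name is my own and the service name stress comes from the example above.

```shell
# Succeeds if the named Service is headless, i.e. its clusterIP is "None".
is_headless_service() {
  ip=$(kubectl get service "$1" -o jsonpath='{.spec.clusterIP}') || return 1
  [ "$ip" = "None" ]
}

# Usage: is_headless_service stress && echo "stress is headless"
```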

Now we create the Stress object:

$ kubectl apply -f key-value-stress.yaml

stress.thelastpickle.com/key-value created

# Query for Stress objects to verify that it was created

$ kubectl get stress

NAME AGE

key-value 4s

Under the hood, stress-operator deploys a Job to run tlp-stress.

$ kubectl get jobs

NAME COMPLETIONS DURATION AGE

key-value 0/1 4s 4s

We can use a label selector to find the pod that is created by the job:

$ kubectl get pods -l stress=key-value,job-name=key-value

NAME READY STATUS RESTARTS AGE

key-value-pv6kz 1/1 Running 0 3m20s

We can monitor the progress of tlp-stress by following the logs:

$ kubectl logs -f key-value-pv6kz

Note: If you are following the steps locally, the Pod name will have a different suffix.
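To avoid copying the generated Pod name by hand, you could look it up with the same label selector. This is a sketch; the helper name stress_pod_name is made up, and it assumes the Job has exactly one Pod.

```shell
# Print the name of the first Pod created by the Job for a Stress instance,
# using the labels from the selector shown above.
stress_pod_name() {
  kubectl get pods -l "stress=$1,job-name=$1" \
    -o jsonpath='{.items[0].metadata.name}'
}

# Usage: kubectl logs -f "$(stress_pod_name key-value)"
```

Alternatively, kubectl logs -f job/key-value follows the logs through the Job without needing the Pod name at all.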

Later we will look at how we monitor tlp-stress with Prometheus and Grafana.

Cleaning Up

When you are ready to delete the Stress instance, run:

$ kubectl delete stress key-value

The above command deletes the Stress object as well as the underlying Job and Pod.
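If you would rather let the run finish before cleaning up, something like the following sketch could work. It relies on the Job having the same name as the Stress object, as seen earlier; the helper name and the default timeout of 65m (a small margin over the 60m duration) are my own choices.

```shell
# Wait for the Job behind a Stress instance to complete, then delete the
# Stress object. The default timeout is an arbitrary margin over the 60m
# duration used in this post.
delete_stress_when_done() {
  name=$1
  timeout=${2:-65m}
  kubectl wait --for=condition=complete "job/$name" --timeout="$timeout" &&
    kubectl delete stress "$name"
}

# Usage: delete_stress_when_done key-value
```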

Provisioning a Cassandra Cluster

stress-operator provides the ability to provision a Cassandra cluster using casskop. This is convenient when you want to quickly spin up a cluster for some testing.

Let’s take a look at another example, time-series-casskop-stress.yaml:

apiVersion: thelastpickle.com/v1alpha1
kind: Stress
metadata:
  name: time-series-casskop
spec:
  stressConfig:
    workload: BasicTimeSeries
    partitions: 50m
    duration: 60m
    readRate: "0.45"
    consistencyLevel: LOCAL_QUORUM
    replication:
      networkTopologyStrategy:
        dc1: 3
    ttl: 300
  cassandraConfig:
    cassandraClusterTemplate:
      metadata:
        name: time-series-casskop
      spec:
        baseImage: orangeopensource/cassandra-image
        version: 3.11.4-8u212-0.3.1-cqlsh
        runAsUser: 1000
        dataCapacity: 10Gi
        imagepullpolicy: IfNotPresent
        deletePVC: true
        maxPodUnavailable: 0
        nodesPerRacks: 3
        resources:
          requests:
            cpu: '1'
            memory: 1Gi
          limits:
            cpu: '1'
            memory: 1Gi
        topology:
          dc:
          - name: dc1
            rack:
            - name: rack1

This time we are running a BasicTimeSeries workload with a TTL of five minutes.

In the cassandraConfig section we declare a cassandraClusterTemplate instead of a cassandraService. CassandraCluster is a CRD provided by casskop. With this template we are creating a three-node cluster in a single rack.

We won’t go into any more detail about casskop for now. It is beyond the scope of this post.

Here is what happens when we run kubectl apply -f time-series-casskop-stress.yaml:

- We create the Stress object

- stress-operator creates the CassandraCluster object specified in cassandraClusterTemplate

- casskop provisions the Cassandra cluster

- stress-operator waits for the Cassandra cluster to be ready (in a non-blocking manner)

- stress-operator creates the Job to run tlp-stress

- tlp-stress runs against the Cassandra cluster

There is another benefit of this approach in addition to being able to easily spin up a cluster. We do not have to implement any steps to wait for the cluster to be ready before running tlp-stress. The stress-operator takes care of this for us.
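To appreciate what the operator saves us, here is a sketch of the kind of polling we might otherwise script by hand. The helper names are made up, and the readiness check is an assumption: I am assuming the CassandraCluster status exposes a phase field that reads Running once the cluster is up, so verify that against your casskop version.

```shell
# poll_until TRIES DELAY CMD...: rerun CMD until it succeeds or TRIES runs out.
poll_until() {
  tries=$1
  delay=$2
  shift 2
  while [ "$tries" -gt 0 ]; do
    "$@" && return 0
    tries=$((tries - 1))
    sleep "$delay"
  done
  return 1
}

# Hypothetical readiness check. The .status.phase field and its "Running"
# value are assumptions about casskop's CassandraCluster status.
cassandra_ready() {
  phase=$(kubectl get cassandracluster "$1" -o jsonpath='{.status.phase}')
  [ "$phase" = "Running" ]
}

# Usage: poll_until 60 10 cassandra_ready time-series-casskop
```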

Cleaning Up

When you are ready to delete the Stress instance, run:

$ kubectl delete stress time-series-casskop

The deletion does not cascade to the CassandraCluster object. This is by design.

If you rerun the same Stress instance (or run a different one that uses the same Cassandra cluster), stress-operator reuses the Cassandra cluster if it already exists.

Run the following to delete the Cassandra cluster:

$ kubectl delete cassandracluster time-series-casskop

Monitoring with Prometheus and Grafana

The stress-operator integrates with Prometheus and Grafana to provide robust monitoring. Earlier we installed grafana-operator and prometheus-operator. They, along with casskop, are optional dependencies. It is entirely possible to integrate with Prometheus and Grafana instances that were installed by means other than the respective operators.

If you want stress-operator to provision Prometheus and Grafana, then the operators must be installed.

There is an additional step that is required for stress-operator to automatically provision Prometheus and Grafana. We need to create a StressContext. Let’s take a look at stresscontext.yaml:

# There should only be one StressContext per namespace. It must be named
# tlpstress; otherwise, the controller will ignore it.
#
apiVersion: thelastpickle.com/v1alpha1
kind: StressContext
metadata:
  name: tlpstress
spec:
  installPrometheus: true
  installGrafana: true

Let’s create the StressContext:

$ kubectl apply -f stresscontext.yaml

Creating the StressContext causes stress-operator to perform several actions including:

- Configure RBAC settings so that Prometheus can scrape metrics

- Create a Prometheus custom resource

- Expose Prometheus with a Service

- Create a ServiceMonitor custom resource which effectively tells Prometheus to monitor tlp-stress

- Create a Grafana custom resource

- Expose Grafana with a Service

- Create and configure a Prometheus data source in Grafana
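One way to see what was created is to list the custom resources in the current namespace. This is a sketch; the resource names passed to kubectl get are my assumption of the names registered by prometheus-operator and grafana-operator, so adjust them if kubectl reports an unknown resource type.

```shell
# List the monitoring custom resources in the current namespace. The
# resource names are assumptions based on the prometheus-operator and
# grafana-operator CRDs.
list_monitoring_resources() {
  kubectl get prometheus,servicemonitor,grafana,grafanadatasource
}

# Usage: list_monitoring_resources
```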

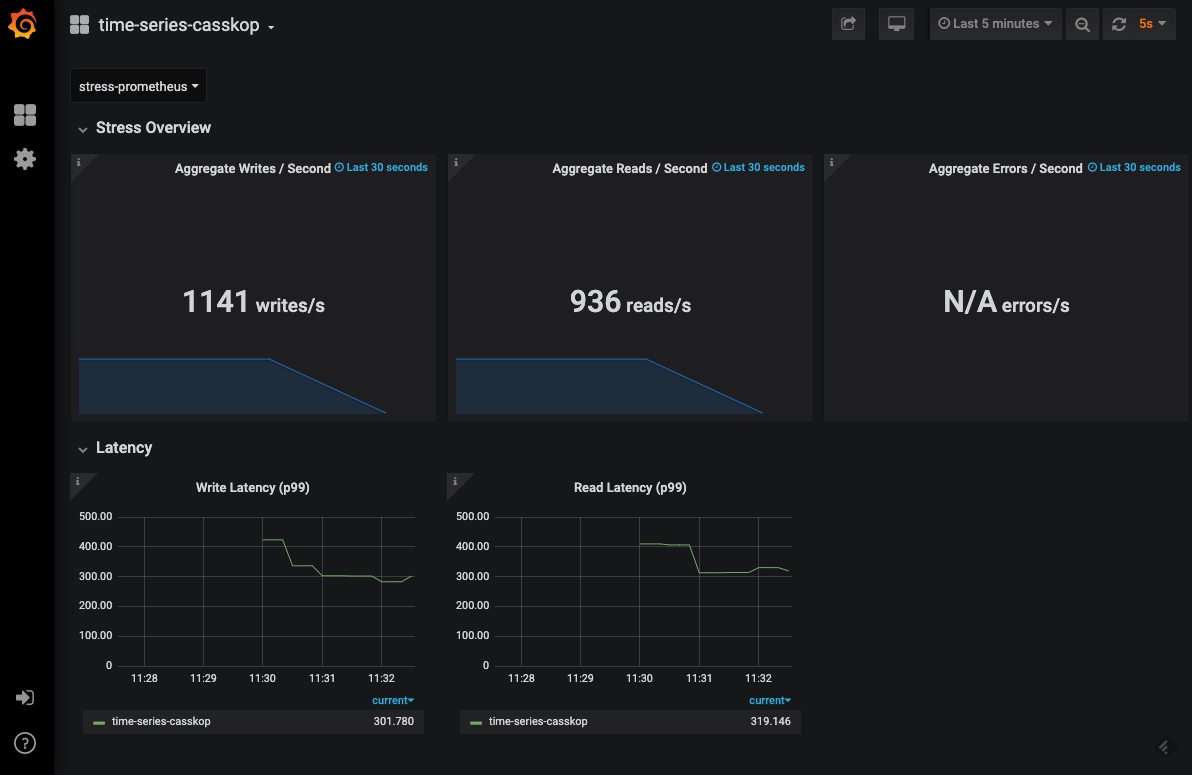

Now when we create a Stress instance, stress-operator also creates a Grafana dashboard for the tlp-stress job. We can test this with time-series-casskop-stress.yaml. The dashboard name will be the same as the name of the Stress instance, which in this example is time-series-casskop.

Note: To rerun the job we need to delete and recreate the Stress instance.

$ kubectl delete stress time-series-casskop

$ kubectl apply -f time-series-casskop-stress.yaml

Note: You do not need to delete the CassandraCluster. The stress-operator will simply reuse it.
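If you rerun jobs often, the delete and apply steps can be wrapped in a small helper. This is just a sketch with a made-up function name; --ignore-not-found keeps the delete from failing when the Stress object does not exist yet.

```shell
# Delete a Stress instance if it exists, then recreate it from its manifest.
rerun_stress() {
  kubectl delete stress "$1" --ignore-not-found &&
    kubectl apply -f "$2"
}

# Usage: rerun_stress time-series-casskop time-series-casskop-stress.yaml
```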

Now we want to check out the Grafana dashboard. There are different ways of accessing a Kubernetes service from outside the cluster. We will use kubectl port-forward.

Run the following to make Grafana accessible locally:

$ kubectl port-forward svc/grafana-service 3000:3000

Forwarding from 127.0.0.1:3000 -> 3000

Forwarding from [::1]:3000 -> 3000

Handling connection for 3000
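With the port-forward running, you can optionally confirm from a second terminal that Grafana is answering before opening the browser. This sketch assumes curl is installed and uses Grafana's /api/health endpoint; the helper name is made up.

```shell
# Succeeds if Grafana answers its health endpoint on the forwarded port.
# The port defaults to 3000, matching the port-forward command above.
grafana_healthy() {
  curl -fsS "http://localhost:${1:-3000}/api/health" >/dev/null
}

# Usage: grafana_healthy && echo "Grafana is up"
```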

Then in your browser go to http://localhost:3000/. It should direct you to the Home dashboard. Click on the Home label in the upper left part of the screen. You should see a row for the time-series-casskop dashboard. Click on it. The dashboard should look something like this:

Cleaning Up

Delete the StressContext with:

$ kubectl delete stresscontext tlpstress

The deletion does not cascade to the Prometheus or Grafana objects. To delete them and their underlying resources run:

$ kubectl delete prometheus stress-prometheus

$ kubectl delete grafana stress-grafana

Wrap Up

This concludes the brief introduction to stress-operator. The project is still in the early stages of development and as such is undergoing lots of changes and improvements. I hope that stress-operator makes testing Cassandra in Kubernetes a little easier and more enjoyable!